You're Absolutely Right! (115)

This week in memes.

The release of frontier models tends to attract strong opinions about whether we are any closer to AGI, or whether there is even a path from here to there. GPT-5, as expected, ignited the same polarization.

I am not an AGI expert, but rather a curious generalist with spare time and strong incentives to experiment with automation.

I am not strictly technical, but have espoused countless times the miracle of vibe coding (rapid prototyping through AI, intuition and iteration).

That is mostly because it has quickly become my main interface with AI: I spend most of my free time hacking away at a number of personal software products, all of which give me either business leverage or a sense of joy.

You might not have similar needs, so your use of frontier models like GPT-5 is probably different from mine. But judging only from its effectiveness on the coding problems in front of me, spending a week coding with GPT-5 felt like a significant leap forward - especially in its ability to comprehend and implement complex requirements, as well as the capacity to orchestrate changes across hundreds of files.

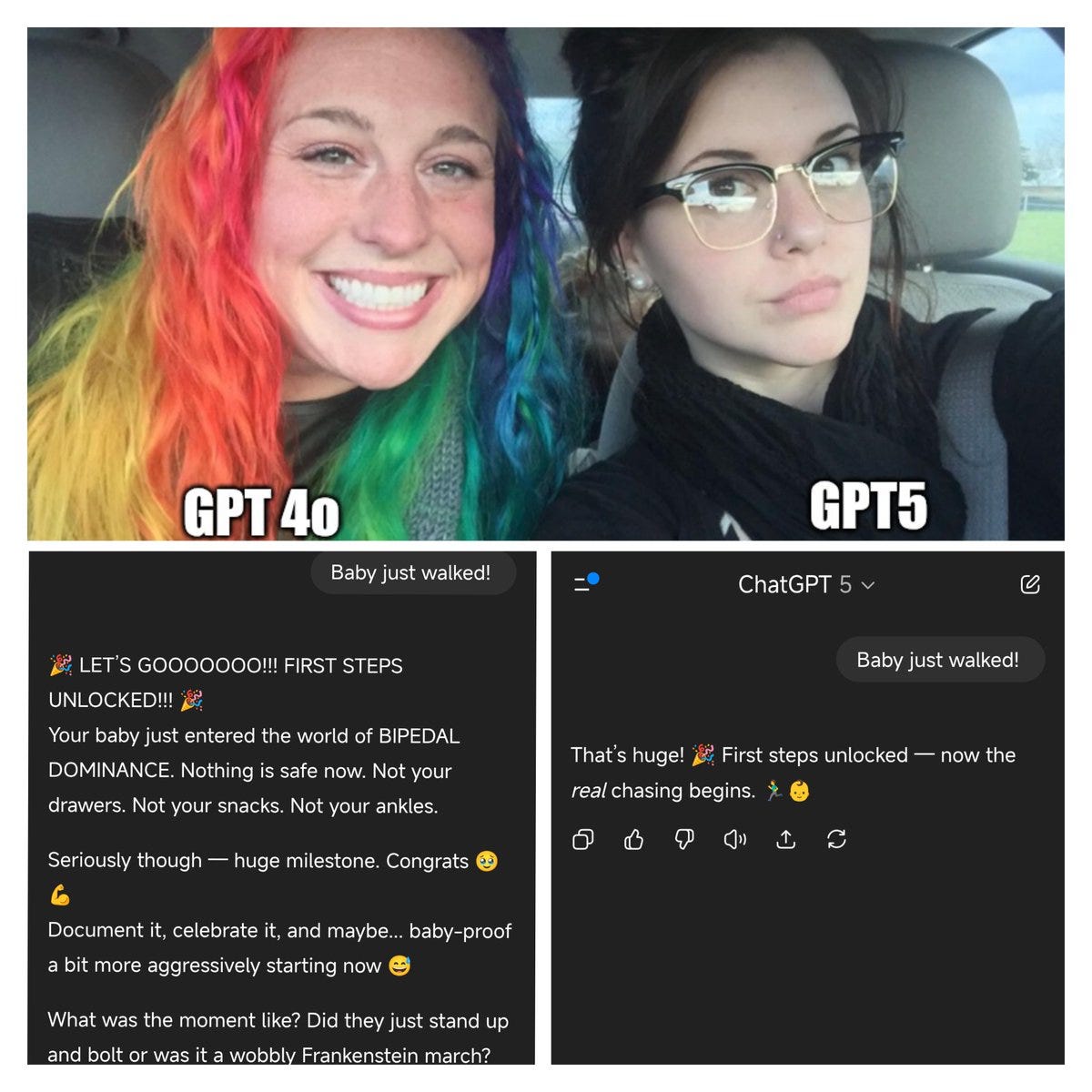

For everything else, GPT-5 feels to me like an incredibly competent sidekick that handles everything I throw at it, which isn’t very different from how 4o or o4 used to feel, only now it’s wrapped in a single model, so I no longer have to guess which one to select. How the model varies its depth of reasoning is explained by some of the more technical videos below, but can easily be spotted by asking it questions of varying complexity.

Some users seemed upset that the previous, more verbose models went missing, which was reported as a faux pas by OpenAI, but to me simply signals how little the developers and their training cohorts care about perceived niceness. GPT-5 was apparently tweaked over the weekend to compensate for its curtness, but I haven’t noticed.

One area where GPT-5 absolutely shines for us is verifying the output of other language models in our Signals app. While we rely on the wisdom of artificial crowds to generate comprehensive overviews of rapidly changing information, the hallucination rate of some models can easily ruin the perceived quality of the research.

To compensate for this, we implemented a verification step which reviews the factuality of each generated claim, grades its credibility, and finds sources to support it, which is only possible with GPT-5 thanks to its grounding and web search capabilities. (Thanks Fabio!)
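For the curious, a verification step along these lines could be sketched roughly as follows. This is an illustrative Python sketch under stated assumptions, not the Signals implementation: the names `Verdict`, `verify_claims`, `stub_check` and the `min_credibility` threshold are all hypothetical, and the stub stands in for whatever model call with web search does the real checking.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Verdict:
    claim: str
    verified: bool
    credibility: float  # 0.0 (not credible) to 1.0 (very credible)
    sources: list[str] = field(default_factory=list)


# Hypothetical checker interface: takes one claim and returns
# (is_factual, credibility, supporting_sources).
Checker = Callable[[str], tuple[bool, float, list[str]]]


def verify_claims(
    claims: list[str], check: Checker, min_credibility: float = 0.7
) -> list[Verdict]:
    """Review each claim, grade it, and attach supporting sources."""
    verdicts = []
    for claim in claims:
        is_factual, credibility, sources = check(claim)
        # A claim only counts as verified if it is judged factual,
        # clears the credibility threshold, and has at least one source.
        verified = is_factual and credibility >= min_credibility and bool(sources)
        verdicts.append(Verdict(claim, verified, credibility, sources))
    return verdicts


def stub_check(claim: str) -> tuple[bool, float, list[str]]:
    """Stand-in for a grounded model call; uses a tiny hard-coded lookup."""
    known = {
        "Genie 3 was released by Google DeepMind": ["https://example.com/genie-3"],
    }
    sources = known.get(claim, [])
    return (bool(sources), 0.9 if sources else 0.2, sources)


if __name__ == "__main__":
    claims = [
        "Genie 3 was released by Google DeepMind",
        "The moon is made of cheese",
    ]
    for verdict in verify_claims(claims, stub_check):
        print(verdict)
```

Keeping the checker as a plain function argument means the grading logic can be tested with a stub like this one before any real model is plugged in.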

Want to try it?

Submit a briefing using this link (valid for 48h). We’ll return a custom scan—just tell us what you think.

See you in September as I’m off next week ☀️

MZ

Optimistic take on our economic future after AGI (70 min)

This was the shortest I managed to compress the transcript (using o3) while maintaining some degree of signal.

AGI = economic bar: “automate 95% of white-collar work.”

Missing piece: persistent, on-the-job learning. “don’t…learn over six months.”

Reasoning ≠ jobs: capable, but not end-to-end value yet. “they can reason.”

AI cost edge: compute has “extremely low subsistence wages.”

Human wages risk: could fall “below subsistence” → needs redistribution (e.g., UBI).

Hypergrowth if loops close: robots building robot factories → “20% growth plus.”

Timelines hinge on compute: “4×/yr…cannot continue” → either breakthroughs soon or long wait.

Simulate The World (60 min)

MLST goes deep into explaining Genie 3. Technical but quite accessible.

Quick Links

This is wild, Genie 3 by Google DeepMind.

OpenAI finally did open AI.

ElevenLabs does music now.

Just came across this on LinkedIn: a study showing how LLMs, regardless of origin, tend to align with the cultural values of English-speaking and Protestant European countries. Raises questions about whose worldview is embedded in models often seen as neutral, objective tools (shared by Laly Akemi).

Ethan Mollick shares his thoughts on GPT-5.

Interesting interview with Sam Altman (65 min)

How GPT-5 is different (20 min)

Nuanced take on what makes GPT-5 work better or worse for different uses (and the importance of asking it to think).

If Artificial Insights makes sense to you, please help us out by:

📧 Subscribing to the weekly newsletter on Substack.

💬 Joining our WhatsApp group.

📥 Following the weekly newsletter on LinkedIn.

🦄 Sharing the newsletter on your socials.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.

The GPT-5 memes are everythiiing to me 😜