There Is No Wall (084)

Spending the holidays online so you don't have to.

Happy Holidays, and welcome to your weekly gift-wrapped insights about the collective revolution we are experiencing.

This week is light on personal commentary and heavy on curated links to help you make sense of the news. I am spending most of my holiday downtime coding. Of all the things I could be doing (with or without AI), programming feels like the highest-impact activity of all, both in terms of learning and enjoyment and in the potential of these creations out in the wild.

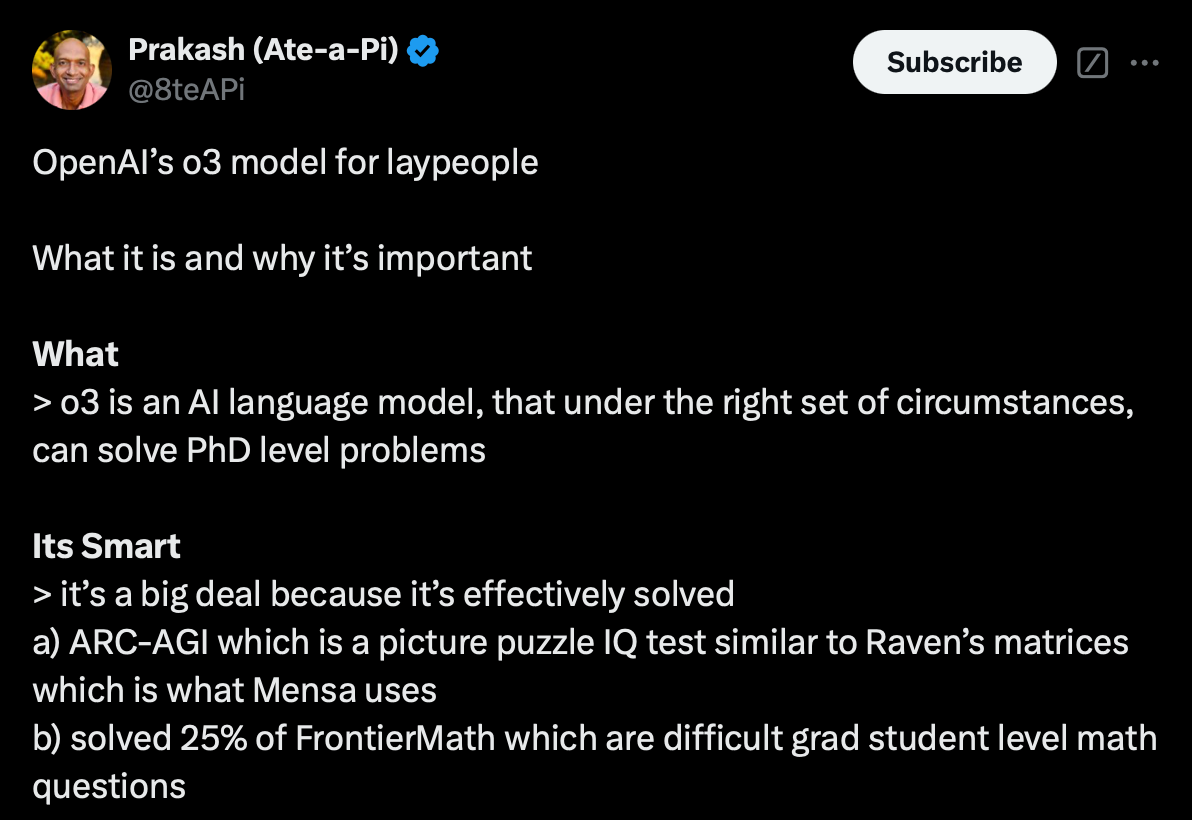

The AI news cycle is incessant, so I expect we’ll keep seeing interesting releases during the otherwise slow months of northern winter. OpenAI finished their 12 days of shipmas last Friday with O3, their new state-of-the-art chain-of-thought model. O3 scores remarkably high on the ARC-AGI challenge, which suggests strong general reasoning ability, albeit at a tremendous financial cost of upwards of thousands of dollars per run.

It’s interesting how a spectrum of potential price points emerges, where certain solutions are extremely time-sensitive whereas others can take an arbitrary amount of time (and money), as long as they eventually get the answer right.

Wishing you quiet days,

Michell

“When someone says AI doesn't deliver business value” (Thanks Bianca!)

Building Effective AI Agents (long read)

Wonderful toolkit from Anthropic. My own agentic workflows are chains of Python scripts working somewhat in concert, with marginal autonomy.

Yann LeCun addresses the United Nations (7 min)

Could not find it on YouTube, only Reddit. Worth a listen.

Everything you need to understand O3

Simulated reasoning on the rise.

According to OpenAI, the o3 model earned a record-breaking score on the ARC-AGI benchmark, a visual reasoning benchmark that has gone unbeaten since its creation in 2019. In low-compute scenarios, o3 scored 75.7 percent, while in high-compute testing, it reached 87.5 percent, comparable to human performance at an 85 percent threshold.

During the livestream, the president of the ARC Prize Foundation said, "When I see these results, I need to switch my worldview about what AI can do and what it is capable of."

Gary Marcus helps calibrate expectations.

WSJ says GPT-5 is over budget and behind schedule

The Year in GPT Wrappers (38 min)

One of my favorite podcasts on why GPT wrappers might actually be fine.

Expanding your mind about what "intelligence" means is, in a way, in keeping with the "AI" subject, in my humble opinion. (Kika)

"Stop thinking of AI as doing the work of 50% of the people. Start thinking of AI as doing 50% of the work for 100% of the people" (Jensen Huang via Henry)

Amazon support gets the balance wrong

TLDraw Computer

I love interesting AI UX.

Whisk, an Image Remixer by Google Labs (52 sec)

Another good way to remix images together. Fewer words, only images. (Thanks Alex!)

Structured outputs for reliable applications (40 min)

OpenAI. This is so relatable & useful.

Substack recommendations

If Artificial Insights makes sense to you, please help us out by:

📧 Subscribing to the weekly newsletter on Substack.

💬 Joining our WhatsApp group.

📥 Following the weekly newsletter on LinkedIn.

🦄 Sharing the newsletter on your socials.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.
