The Future is Global (089)

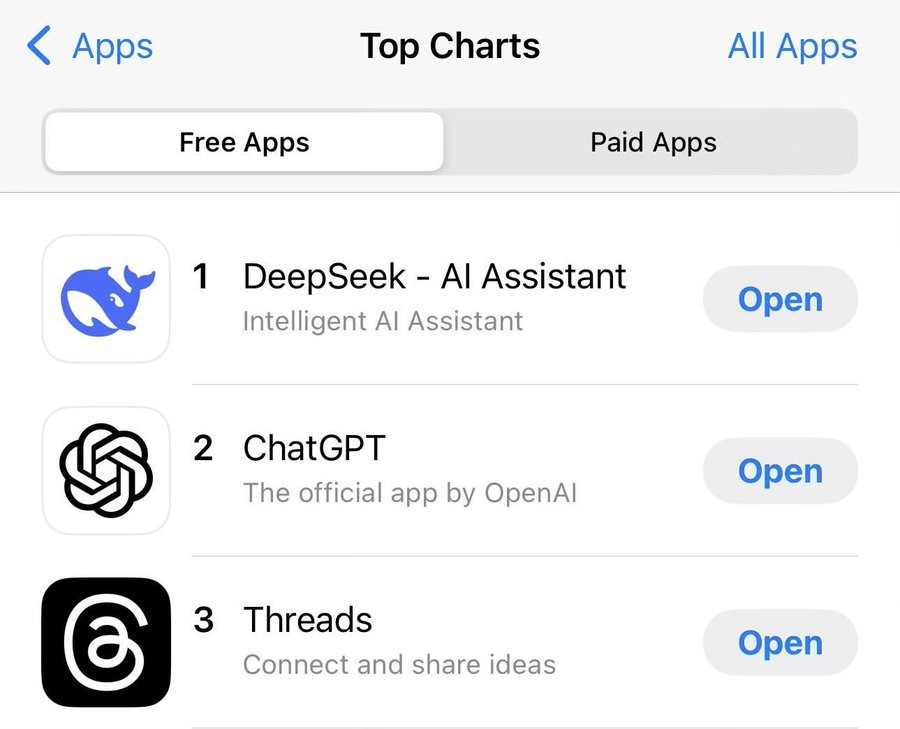

How do you compete with free?

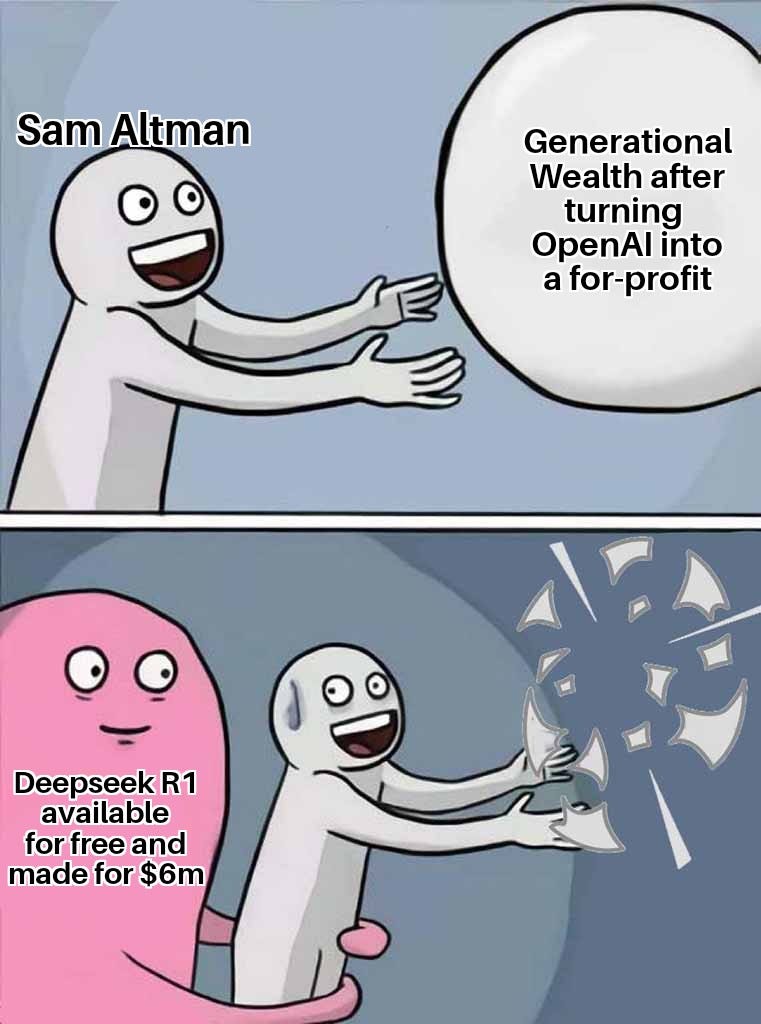

What happens when your entire value proposition is challenged by an approach that nobody really saw coming? What if the reason you are raising half a trillion dollars is based on the premise of costly computation and expensive experts only your industry has access to?

Until a week ago, most of the AI world revolved around the deeply held belief that the state of the art was tightly correlated with the high training costs associated with cutting-edge GPUs, which naturally meant models should be closed-source and available only through paid subscriptions and APIs.

Turns out, these beliefs have arguably been proven false.

Instead of needing billions of dollars to train a leading model, the Chinese quantitative trading firm High-Flyer launched DeepSeek, proving you only need a few million. Not only are their new V3 and R1 models better than o1 and Claude 3.5 Sonnet, but they also cost a fraction as much to run via API and are fully available for download as open source, with open weights and published papers.

You can test it on DeepSeek.com or read some of its creative prose to get a sense of how incredible it is.

Personally, I have been using R1 in Cursor for the last few days and am blown away by its reasoning capability. Instead of jumping straight into solution mode (like o1 and Sonnet), it first <thinks> about the problem, in clear view of the user, before proposing the requested code snippets. It doesn’t always get the solution right, but it has become an invaluable complement to my development approach. The fact that I could be running the model locally is just the cherry on top.

While we all grapple with what is happening, I have collected a bunch of threads, memes and articles below to help you make sense of what will clearly be a turbulent and exciting year.

Until next week,

MZ

P.S. 1: We just launched a Lisbon meetup group on our WhatsApp. Join if you want to meet the community in person.

P.S. 2: Are you looking for speakers on AI? We have some incredible talent in the community and it could be nice to organize an exchange.

DeepSeek Primer

@resters (HN)

For those who haven't realized it yet, Deepseek-R1 is better than claude 3.5 and better than OpenAI o1-pro, better than Gemini. It is simply smarter -- a lot less stupid, more careful, more astute, more aware, more meta-aware, etc. We know that Anthropic and OpenAI and Meta are panicking. They should be. The bar is a lot higher now. The justification for keeping the sauce secret just seems a lot more absurd. None of the top secret sauce that those companies have been hyping up is worth anything now that there is a superior open source model. Let that sink in.

@tictoctick (X)

DeepSeek is like buying the most expensive house in the neighborhood for 10 million and a guy next month buys similar house next door for 200K. Very few understand this means but they will soon feel it.

@TKL_Adam (X)

OpenAI was founded 10 years ago, has 4,500 employees, and has raised $6.6 billion in capital. DeepSeek was founded less than 2 years ago, has 200 employees, and was developed for less than $10 million. How are these two companies now competitors?

@snowmaker (Jared Friedman - who is also in the YC podcast below)

Lots of hot takes on whether it's possible that DeepSeek made training 45x more efficient, but @doodlestein wrote a very clear explanation of how they did it. Once someone breaks it down, it's not hard to understand. Rough summary:

Use 8 bit instead of 32 bit floating point numbers, which gives massive memory savings

Compress the key-value indices which eat up much of the VRAM; they get 93% compression ratios

Do multi-token prediction instead of single-token prediction which effectively doubles inference speed

Mixture of Experts model decomposes a big model into small models that can run on consumer-grade GPUs
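
To put the first point in numbers, here is a minimal back-of-the-envelope sketch of how much memory the weights alone would take at 32-bit versus 8-bit precision. The 100-billion-parameter size and the helper name `weight_memory_gb` are assumptions picked for round figures, not DeepSeek's actual configuration, and the calculation ignores activations, optimizer state and the KV cache.

```python
def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Memory needed to hold the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Hypothetical model size, chosen only to make the arithmetic easy to follow.
params = 100_000_000_000

fp32_gb = weight_memory_gb(params, 32)  # 4 bytes per parameter
fp8_gb = weight_memory_gb(params, 8)    # 1 byte per parameter

print(f"32-bit weights: {fp32_gb:.0f} GB")
print(f" 8-bit weights: {fp8_gb:.0f} GB")
print(f"Reduction: {fp32_gb / fp8_gb:.0f}x")
```

Going from four bytes to one byte per parameter cuts weight memory by a factor of four, regardless of model size.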

@wordgrammer (X)

I spent the day learning exactly how DeepSeek trained at 1/30 the price, instead of working on my pitch deck. The tl;dr to everything, according to their papers:

Q: How did DeepSeek get around export restrictions?

A: They didn’t. They just tinkered around with their chips to make sure they handled memory as efficiently as possibly. They lucked out, and their perfectly optimized low-level code wasn’t actually held back by chip capacity.

Q: How did DeepSeek train so much more efficiently?

A: They used the formulas below to “predict” which tokens the model would activate. Then, they only trained these tokens. They need 95% fewer GPUs than Meta because for each token, they only trained 5% of their parameters.

Q: How is DeepSeek’s inference so much cheaper?

A: They compressed the KV cache. (This was a breakthrough they made a while ago.)

Q: How did they replicate o1?

A: Reinforcement learning. Take complicated questions that can be easily verified (either math or code). Update the model if correct.
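
The last answer is easier to picture with a toy example. The sketch below is only an illustration of the "easily verified" idea: several sampled answers to one question are scored 1 or 0 against a known reference, and each score is compared with the group average to decide which samples would be reinforced. The function names, the exact-match check and the mean baseline are all assumptions for illustration, not the procedure described in DeepSeek's papers.

```python
from statistics import mean


def verifiable_reward(model_answer: str, reference_answer: str) -> float:
    """Return 1.0 when the final answer matches the reference exactly, else 0.0."""
    return 1.0 if model_answer.strip() == reference_answer.strip() else 0.0


def centered_rewards(samples: list[str], reference: str) -> list[float]:
    """Score each sample, then subtract the group mean as a simple baseline.

    Positive values mark samples a training step would push the model toward,
    negative values mark samples it would push away from.
    """
    rewards = [verifiable_reward(s, reference) for s in samples]
    baseline = mean(rewards)
    return [r - baseline for r in rewards]


# Hypothetical answers sampled from a model for the question "What is 6 * 7?"
samples = ["42", " 42 ", "41", "forty-two"]
signals = centered_rewards(samples, reference="42")

for answer, signal in zip(samples, signals):
    print(f"{answer!r:>12}  {signal:+.1f}")
```

Math and code suit this setup because a checker (an exact match here, a test suite for code) can grade answers automatically, with no human labeling in the loop.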

Explainer: What's R1 & Everything Else?

Don’t miss Tim Kellogg’s superb overview of reasoning models and R1.

Is AI making you dizzy? A lot of industry insiders are feeling the same. R1 just came out a few days ago out of nowhere, and then there’s o1 and o3, but no o2. Gosh! It’s hard to know what’s going on. This post aims to be a guide for recent AI developments. It’s written for people who feel like they should know what’s going on, but don’t, because it’s insane out there.

NYT: How Chinese A.I. Start-Up DeepSeek Is Competing With Silicon Valley Giants

Unlocked article about DeepSeek.

The company built a cheaper, competitive chatbot with fewer high-end computer chips than U.S. behemoths like Google and OpenAI, showing the limits of chip export control.

The Path to AGI (1h)

Excellent interview with Demis Hassabis. Don’t miss it.

AI today is overhyped in the short term but massively underappreciated in the long term—what’s coming will be truly transformative.

Claude and the Future of Work (35 min)

Pretty good interview with Dario Amodei of Anthropic, conducted by the WSJ in Davos.

Surprisingly, they are not working on image generation.

As AI gets better, critical thinking will become one of the most valuable skills—people need to question what they see more than ever.

YC Podcast of the week (40 min)

I’m a fan of the YC Lightcone Podcast and will always recommend great episodes like this one. Excellent insight into where startup money is going.

AI and Decision-Making

Smart conversation with Ray Dalio at Davos AI House.

The future is unknown and unknowable. The real skill is learning how to deal with what you don’t know.

If Artificial Insights makes sense to you, please help us out by:

📧 Subscribing to the weekly newsletter on Substack.

💬 Joining our WhatsApp group.

📥 Following the weekly newsletter on LinkedIn.

🦄 Sharing the newsletter on your socials.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.
