Talking to Ourselves (056)

Your weekly download of actual intelligence.

Happy Monday and welcome to your weekly overview of who is putting AI where.

In issue 054 we discussed Google’s challenge in dealing with the “Jagged Edge” of AI expectations (infusing your search results with generative answers can seriously damage your credibility). Each of us seems to carry an inner model that shapes our expectations about how other intelligences are supposed to behave.

This mismatch between how we want AIs to perform and how they actually respond to us may be the biggest opportunity, or threat, in determining how we end up using these systems, individually and collectively.

When Apple positions Siri as knowledgeable and capable, we form a mental model of what it should be able to do for us. When it then fails and falters at what feel like basic requests, we start doubting the capacity of the system as a whole.

ChatGPT took the world by storm in 2023 because our expectations of chatbots were low and it wildly outperformed our mental model of what a chatbot could do. The same happened when AlphaGo outplayed Lee Sedol in 2016 and when Deep Blue beat Kasparov in 1997.

Whatever Apple launches today at WWDC will surely be a big step forward in capability, and maybe it will be enough to start changing our minds about AI’s potential.

To learn more about the open-source masterclass we’re working on, make sure to join our WhatsApp group.

Until next week,

MZ

How can we possibly delve on our own?

Open letter from researchers at OpenAI and Google DeepMind

AI companies possess substantial non-public information about the capabilities and limitations of their systems, the adequacy of their protective measures, and the risk levels of different kinds of harm. However, they currently have only weak obligations to share some of this information with governments, and none with civil society. We do not think they can all be relied upon to share it voluntarily.

This feels significant

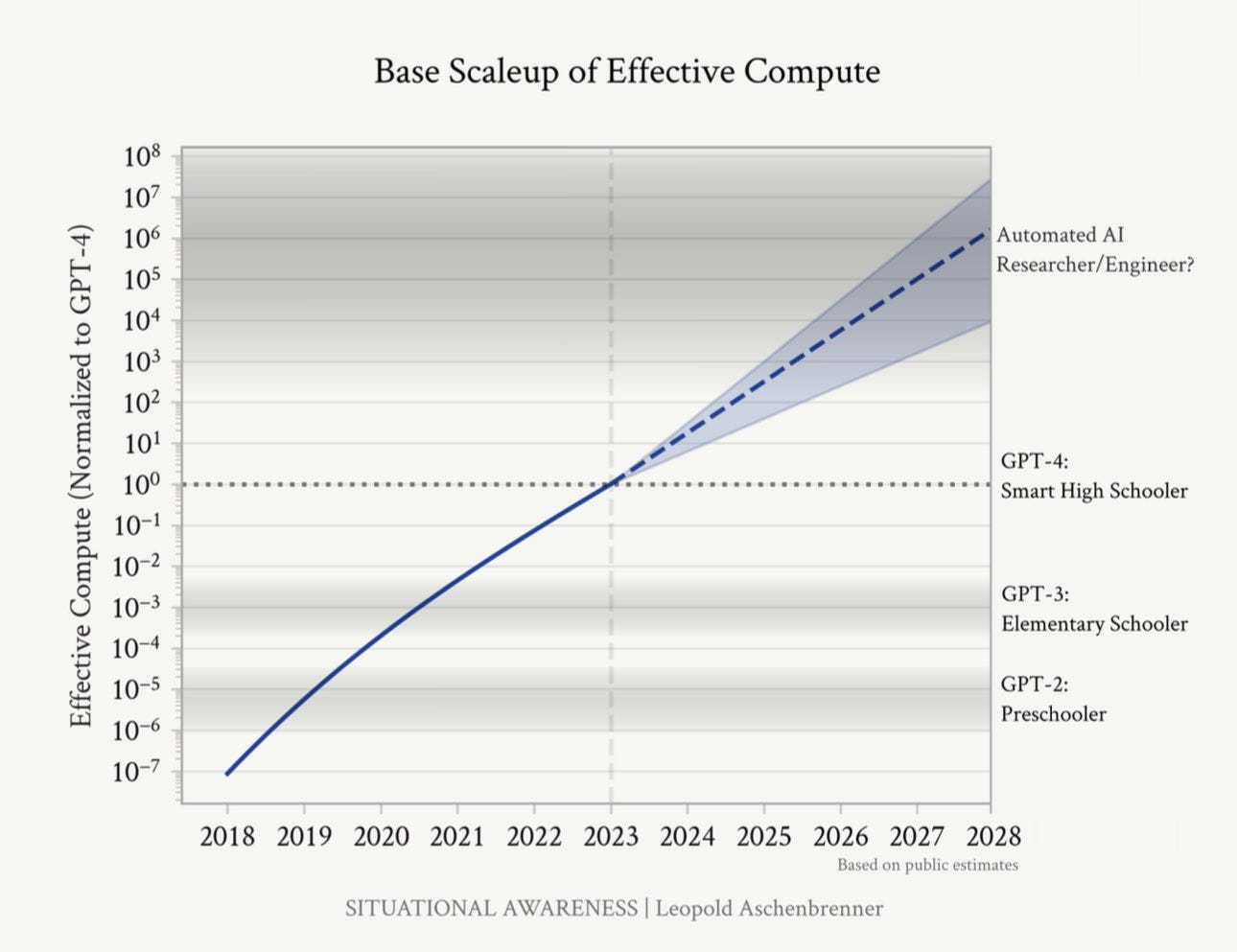

The speculative graph from Leopold Aschenbrenner’s paper was all over X last week.

Understanding and Governing AI

Short and sweet lecture on governing AI by Helen Toner, former OpenAI board member. Insightful, well-rounded arguments, plus a couple of funny jabs about her departure from the board.

Researchers sometimes describe deep neural networks, the main kind of AI being built today, as a black box.

Extracting concepts from GPT-4

Article by OpenAI explaining how they built methods to decompose GPT-4's internal representations into interpretable patterns, similar to Anthropic's paper on Mapping the Mind of an LLM from a few weeks ago.

Both articles approach interpretability by mapping patterns of neuron activation ("features") to human-interpretable concepts, offering a way to understand the internal representations of LLMs.
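For the curious, here is a minimal sketch of the kind of sparse autoencoder both teams use to find these features (Anthropic trains with an L1 sparsity penalty, OpenAI with a top-k activation). It assumes a toy setup: plain Python lists instead of a tensor library, hand-picked weights, and hypothetical function names. Training, which fits the weights to minimize reconstruction error on real model activations, is left out.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # list of rows


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def topk_sae_forward(x: Vector, w_enc: Matrix, b_enc: Vector,
                     w_dec: Matrix, b_dec: Vector, k: int) -> Tuple[Vector, Vector]:
    """Encode activation x into a sparse feature vector, then reconstruct it.

    w_enc is n_features x d_model, w_dec is d_model x n_features (hypothetical toy layout).
    """
    # Subtract the decoder bias first (a common choice), then score every dictionary feature.
    centered = [xi - bi for xi, bi in zip(x, b_dec)]
    pre = [p + b for p, b in zip(matvec(w_enc, centered), b_enc)]
    # Keep only the k highest-scoring features (clipped at zero); all others are zeroed.
    keep = sorted(range(len(pre)), key=lambda i: pre[i], reverse=True)[:k]
    feats = [0.0] * len(pre)
    for i in keep:
        feats[i] = max(pre[i], 0.0)
    # Reconstruct x as a sparse sum of decoder directions plus the bias.
    recon = [r + b for r, b in zip(matvec(w_dec, feats), b_dec)]
    return feats, recon


# Toy example: 2-d activations, 3 dictionary features, decoder = encoder transpose.
w_enc = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
w_dec = [[1.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
feats, recon = topk_sae_forward([2.0, 1.0], w_enc, [0.0] * 3, w_dec, [0.0] * 2, k=1)
print(feats, recon)  # [2.0, 0.0, 0.0] [2.0, 0.0]
```

Each nonzero entry in `feats` is a candidate "feature"; interpretability work then inspects which inputs make a given feature fire to decide what concept it stands for.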

Confronting the Unknown

Wharton business professor Ethan Mollick is one of my favorite thinkers on generative AI. Here's a fun Big Think video on 4 ways of thinking about future AI.

AI is here to stay. That is something that you get to make a decision about how you want to handle, and to learn to work with, and learn to thrive with, rather than to just be scared of.

Fascinating deep dive into how OpenAI parses image data: the author reverse-engineers the likely approach used to convert images into tokens and vectors.
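The article digs into OpenAI’s specific pipeline. As a general illustration only, the common Vision Transformer (ViT) recipe cuts an image into fixed-size patches, flattens each one, and linearly projects it into an embedding vector that the model then treats like a token. This sketch assumes a grayscale image stored as nested lists and uses hypothetical names (`patchify`, `embed`); it is not a claim about how GPT-4o actually encodes images.

```python
from typing import List

Image = List[List[float]]  # grayscale pixels, one list per row


def patchify(image: Image, patch: int) -> List[List[float]]:
    """Split an image into non-overlapping patch x patch squares, row by row,
    flattening each square into one vector (one 'token' before projection)."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image sides must be multiples of the patch size")
    tokens = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            tokens.append([image[r][c]
                           for r in range(top, top + patch)
                           for c in range(left, left + patch)])
    return tokens


def embed(tokens: List[List[float]], weights: List[List[float]]) -> List[List[float]]:
    """Linearly project each flattened patch to an embedding vector.
    weights has one row per embedding dimension, each of length patch * patch."""
    return [[sum(w * t for w, t in zip(row, tok)) for row in weights] for tok in tokens]


image = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4x4 toy image
tokens = patchify(image, 2)
print(len(tokens), tokens[0])          # 4 [0, 1, 4, 5]
print(embed(tokens, [[1, 1, 1, 1]]))   # [[10], [18], [42], [50]]
```

In a real model the projection weights are learned and the embedding has hundreds or thousands of dimensions; the token count grows with image size, which is why image inputs are billed in tokens.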

Generative AI handbook - a comprehensive guide to learning about state of the art AI systems (by William Brown).

Lots of insights into possible business models and how to compete with OpenAI, via YC.

Anthropic is apparently betting on explainable “personalities”, or character models, for Claude. Audio version.

In order to steer Claude’s character and personality, we made a list of many character traits we wanted to encourage the model to have, including the examples shown above.

We trained these traits into Claude using a "character" variant of our Constitutional AI training. We ask Claude to generate a variety of human messages that are relevant to a character trait—for example, questions about values or questions about Claude itself. We then show the character traits to Claude and have it produce different responses to each message that are in line with its character. Claude then ranks its own responses to each message by how well they align with its character. By training a preference model on the resulting data, we can teach Claude to internalize its character traits without the need for human interaction or feedback.
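The self-ranking step maps onto a standard recipe for preference models: turn each ranking into (chosen, rejected) pairs and train a scorer so chosen responses score higher, using a Bradley-Terry style pairwise loss. This is a toy under loud assumptions: the "preference model" is just one learnable score per response string, the helper names are hypothetical, and nothing here is Anthropic’s actual training code.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple


def ranking_to_pairs(ranked: List[str]) -> List[Tuple[str, str]]:
    """Turn responses ordered best-to-worst into (chosen, rejected) pairs."""
    return [(ranked[i], ranked[j]) for i, j in combinations(range(len(ranked)), 2)]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def pairwise_loss(score_chosen: float, score_rejected: float) -> float:
    """-log sigmoid(chosen - rejected), computed stably as softplus(-margin)."""
    x = -(score_chosen - score_rejected)
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def fit_scores(pairs: List[Tuple[str, str]], steps: int = 200, lr: float = 0.1) -> Dict[str, float]:
    """Fit one scalar score per response by gradient descent on the pairwise loss
    (a stand-in for training a real preference model)."""
    scores = {r: 0.0 for pair in pairs for r in pair}
    for _ in range(steps):
        for chosen, rejected in pairs:
            # d(loss)/d(score_chosen) = -sigmoid(-margin); the rejected score gets the opposite.
            g = sigmoid(-(scores[chosen] - scores[rejected]))
            scores[chosen] += lr * g
            scores[rejected] -= lr * g
    return scores


ranked = ["warm, curious reply", "neutral reply", "dismissive reply"]
scores = fit_scores(ranking_to_pairs(ranked))
print(scores[ranked[0]] > scores[ranked[1]] > scores[ranked[2]])  # True
```

The appeal of this setup is that the ranking can come from the model itself, so the preference data is produced without human labelers, which is the point the quote makes.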

We have made stickers and other goodies for all newsletter readers who want them!

If you’d like some merch in your mailbox, please share your postal address using this form. (Supplies are limited)

If Artificial Insights makes sense to you, please help us out by:

📧 Subscribing to the weekly newsletter on Substack.

💬 Joining our WhatsApp group.

📥 Following the weekly newsletter on LinkedIn.

🏅 Forwarding this issue to colleagues and friends.

🦄 Sharing the newsletter on your socials.

🎯 Commenting with your favorite talks and thinkers.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.

You are receiving this newsletter because you signed up on envisioning.io or Substack.