Systems that understand context and take meaningful actions (090)

Agents and the people they operate.

Happy Monday and welcome to your weekly overview of AI bits that caught my attention.

Thanks to the explosion of capabilities around software development with AI, I am now spending most of my waking hours coding away at various apps and systems. This would have been impossible only a year ago, and the sense of agency this has given me is difficult to even estimate. Not only are these apps built using language models, but they also employ natural language intelligence thanks to extensive use of APIs and agentic workflows.

One of these apps is a research assistant which currently performs a horizon scan for any given organization, based on generative input from half a dozen different language models, then consolidates and estimates various impact scores using other models. It’s completely web-based, and meant to give our customers at Envisioning a better starting point when collecting data for visualization. I will do a public showcase soon.

The other is an iOS version of the Purpose experience published on NavigateWithin.com last year. The web version is fully functional, but over the holiday break I decided to experiment with native app development and realized it is a much better fit for personal development. The app is designed to offer users a handful of experiences augmented by language models, which will hopefully help them understand themselves better the more they use it. The iOS version is going on TestFlight soon, so please reply with your iCloud email address if you want to become an early tester.

What these experiments have in common is that anyone with enough patience and $20/month for a Cursor subscription can now build apps. You need a solid understanding of the foundations of software development, but the days of crafting code line by line are in many ways over. My experience has been experimental but successful. I have achieved alone in weeks what might have taken a small team a few months using traditional approaches. None of these are production-ready, but they each work as a solid prototype or foundation for building something more elaborate.

If learning some of these skills sounds valuable, please let me know. I am preparing a series of workshops which would help non-coders learn the principles of building with AI and launching at least one personal project. I haven’t set a date for the first cohort but you’ll be the first to know when it goes live.

Meetups

I am aligning personal travel plans with opportunities to meet as many of you as possible. Join the WhatsApp groups to help coordinate and save the following dates if you’re around:

Amsterdam: Week of March 10 (Exact date TBD). Derek & Laly are helping me organize a show & tell of personal AI projects so please let us know in the group if you have something to share!

London: March 21 or 22 (I’m flexible and we can coordinate on WhatsApp).

Lisbon: April 29 (Before FutureDays) with the help of Emma & Pedro.

São Paulo: Week of May 12, exact date TBD.

Until next week,

MZ

AI guidelines from The Vatican

ANTIQUA ET NOVA: Note on the Relationship Between Artificial Intelligence and Human Intelligence.

Simon Willison on Hacker News highlighted these interesting bits on idolatry:

104. Technology offers remarkable tools to oversee and develop the world's resources. However, in some cases, humanity is increasingly ceding control of these resources to machines. Within some circles of scientists and futurists, there is optimism about the potential of artificial general intelligence (AGI), a hypothetical form of AI that would match or surpass human intelligence and bring about unimaginable advancements. Some even speculate that AGI could achieve superhuman capabilities. At the same time, as society drifts away from a connection with the transcendent, some are tempted to turn to AI in search of meaning or fulfillment---longings that can only be truly satisfied in communion with God. [194]

105. However, the presumption of substituting God for an artifact of human making is idolatry, a practice Scripture explicitly warns against (e.g., Ex. 20:4; 32:1-5; 34:17). Moreover, AI may prove even more seductive than traditional idols for, unlike idols that "have mouths but do not speak; eyes, but do not see; ears, but do not hear" (Ps. 115:5-6), AI can "speak," or at least gives the illusion of doing so (cf. Rev. 13:15). Yet, it is vital to remember that AI is but a pale reflection of humanity---it is crafted by human minds, trained on human-generated material, responsive to human input, and sustained through human labor. AI cannot possess many of the capabilities specific to human life, and it is also fallible. By turning to AI as a perceived "Other" greater than itself, with which to share existence and responsibilities, humanity risks creating a substitute for God. However, it is not AI that is ultimately deified and worshipped, but humanity itself---which, in this way, becomes enslaved to its own work. [195]

If you haven't played around with DeepSeek's exposed chain-of-thought (reasoning) then I highly recommend it.

Agents and the path to AGI (30 min)

Build your own AGI.

With reasoning, we’re able to reach higher and higher levels of reliability—not by training bigger models, but by letting them think longer.

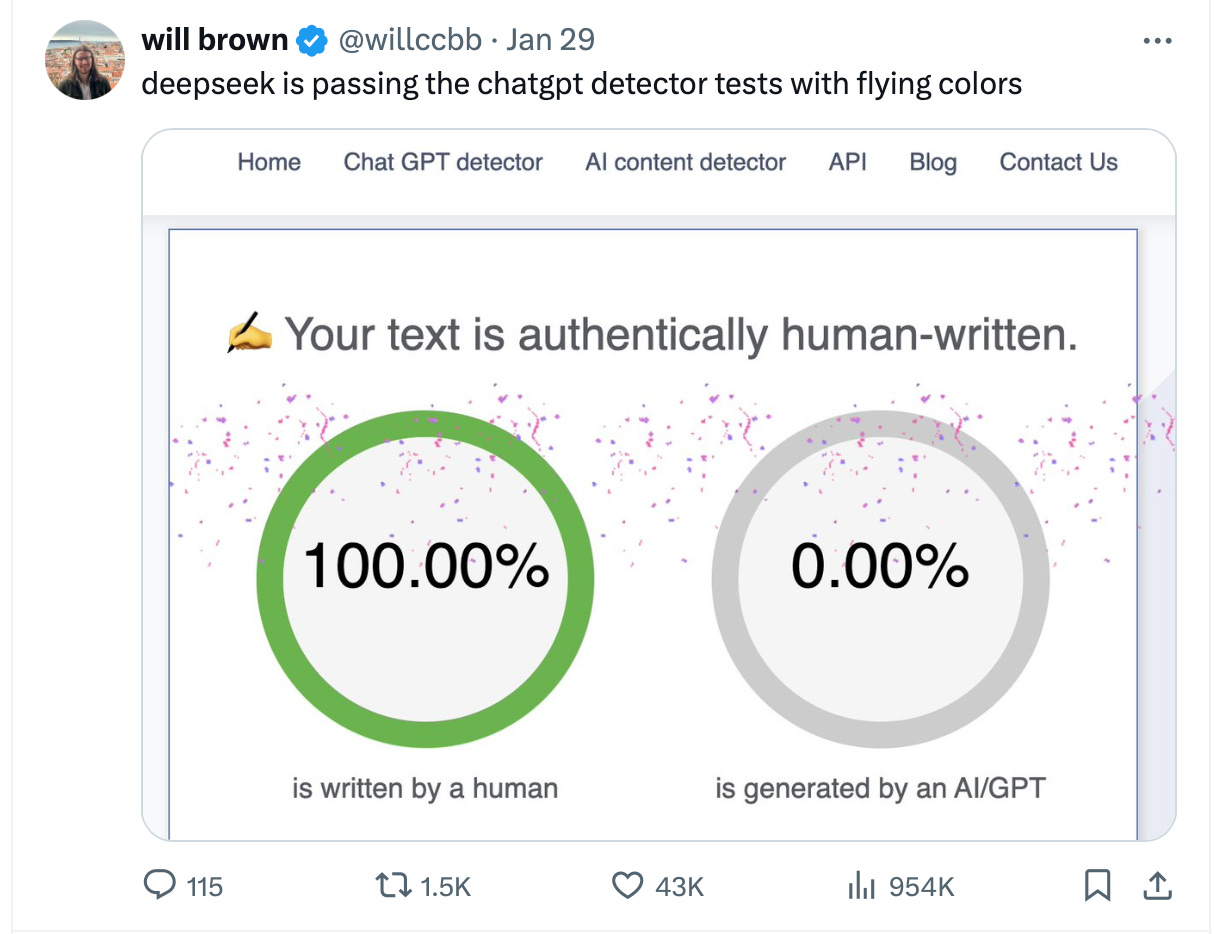

DeepSeek facts vs. hype (40 min)

Superb technical conversation with IBM researchers separating fact from fiction when it comes to DeepSeek.

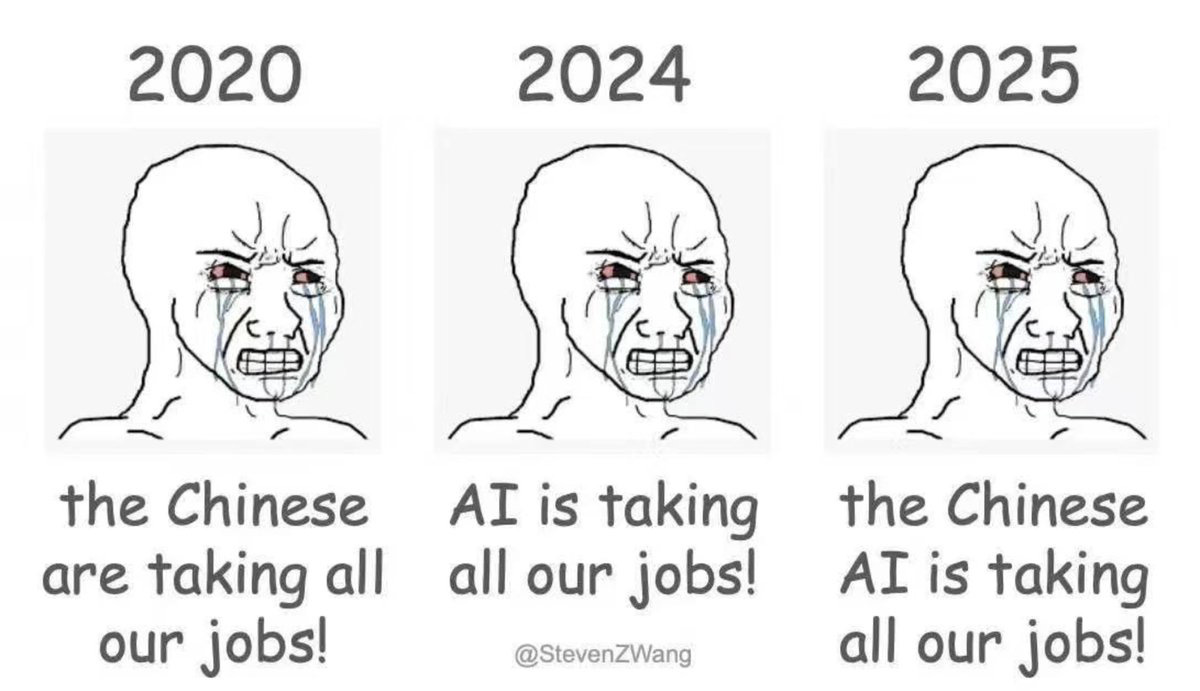

DeepSeek is essentially eroding the competitive moat that big model providers have relied on by making state-of-the-art AI open source.

DeepSeek insights

Dario Amodei offers some additional insight into how DeepSeek probably trained their V3 and R1 models, and theorizes about some of the exaggerated numbers that have been thrown around in the press.

DeepSeek does not "do for $6M what cost US AI companies billions". I can only speak for Anthropic, but Claude 3.5 Sonnet is a mid-sized model that cost a few $10M's to train (I won't give an exact number). Also, 3.5 Sonnet was not trained in any way that involved a larger or more expensive model (contrary to some rumors). Sonnet's training was conducted 9-12 months ago, and DeepSeek's model was trained in November/December, while Sonnet remains notably ahead in many internal and external evals. Thus, I think a fair statement is "DeepSeek produced a model close to the performance of US models 7-10 months older, for a good deal less cost (but not anywhere near the ratios people have suggested)".

Neural Networks and Probabilistic Learning (30 min)

Nobel Prize winner Geoffrey Hinton goes deep in this lecture about artificial neural networks and why they work. Technical but worth absorbing to understand how probabilistic systems give rise to intelligence.

The amazing thing about Boltzmann machines is that there’s a very simple learning algorithm that will do something that seems impossibly complex—constructing interpretations of sensory data without explicit supervision.

What Differentiates us from Machines? (30 min)

Five fascinating perspectives from iai.

If Artificial Insights makes sense to you, please help us out by:

📧 Subscribing to the weekly newsletter on Substack.

💬 Joining our WhatsApp group.

📥 Following the weekly newsletter on LinkedIn.

🦄 Sharing the newsletter on your socials.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.
