Stranger Than You Can Imagine (088)

Why the future is more than a useful abstraction.

Our collective capacity to process change has limits.

The saying “science advances one funeral at a time” highlights how societal norms evolve only as quickly as our minds, and our identities, can adapt. We cling to a shared perception of reality and construct our self-image from the feedback of this illusion. Beneath this superficially shared reality, each of us inhabits an infinitely complex inner world that crystallizes by a certain age and is rarely questioned thereafter.

People are almost physically incapable of perceiving what is “really” happening. We trust experts instinctively, often without understanding what creates credibility in fields beyond our grasp. The explosion of the internet and social media has, in my opinion, left many unaware and unprepared for the mostly inevitable economic and social shifts we face in the coming decades. Formal education is most precarious when there’s either too little or too much of it. Both those without education and those whose identities are entirely shaped by these systems have the most to lose.

Profound shifts require flexibility to take hold. Rigid systems break.

Resilience against ontological shock comes from a worldview grounded in lived experience and trust that there’s more than we perceive. Materialism is a useful metaphor, but many truths—like consciousness—defy explanation.

What artificial (and other forms of) intelligence will do to us depends on how we receive it. Language models have absorbed vast amounts of text, creating interfaces that would have seemed magical only five years ago.

We cannot imagine what the next 5 or 50 years will bring, only that understanding the future demands comfort with uncertainty.

Until next week,

MZ

How do AI Models Think? (1h 20m)

Deep and sprawling interview on MLST with Laura Ruis, a PhD student at University College London and researcher at Cohere. Laura explains her groundbreaking research on how LLMs perform reasoning and posits several hypotheses about the likely implications of scaling for near-future capabilities. While the conversation is rather technical at times, I recommend listening to the whole interview to better understand where your own knowledge gaps are.

Using AI in your Startup (15 min)

From one of my favorite channels (YC): what you should consider if you’re thinking about pivoting to AI or incorporating it into your business.

The future of companionship

Fascinating read by the NYT on falling in love with chatbots (unlocked link).

Dutch public TV channel NPO 3 is making a series about AI love. You can turn on automatic translation, and it seems pretty good. It’s a documentary, so it’s quite interesting to see how the movie Her predicted this kind of centaur love. Via Rodrigo Turra!

Meme via Jurgen Gravestein.

SOTA Thinking

Gwern on o1-class models and how they differ from models like GPT-4. The whole thread is interesting.

Long Reads on Substack

Fascinating hypothesis about how next-generation private frontier models are being used to train public models.

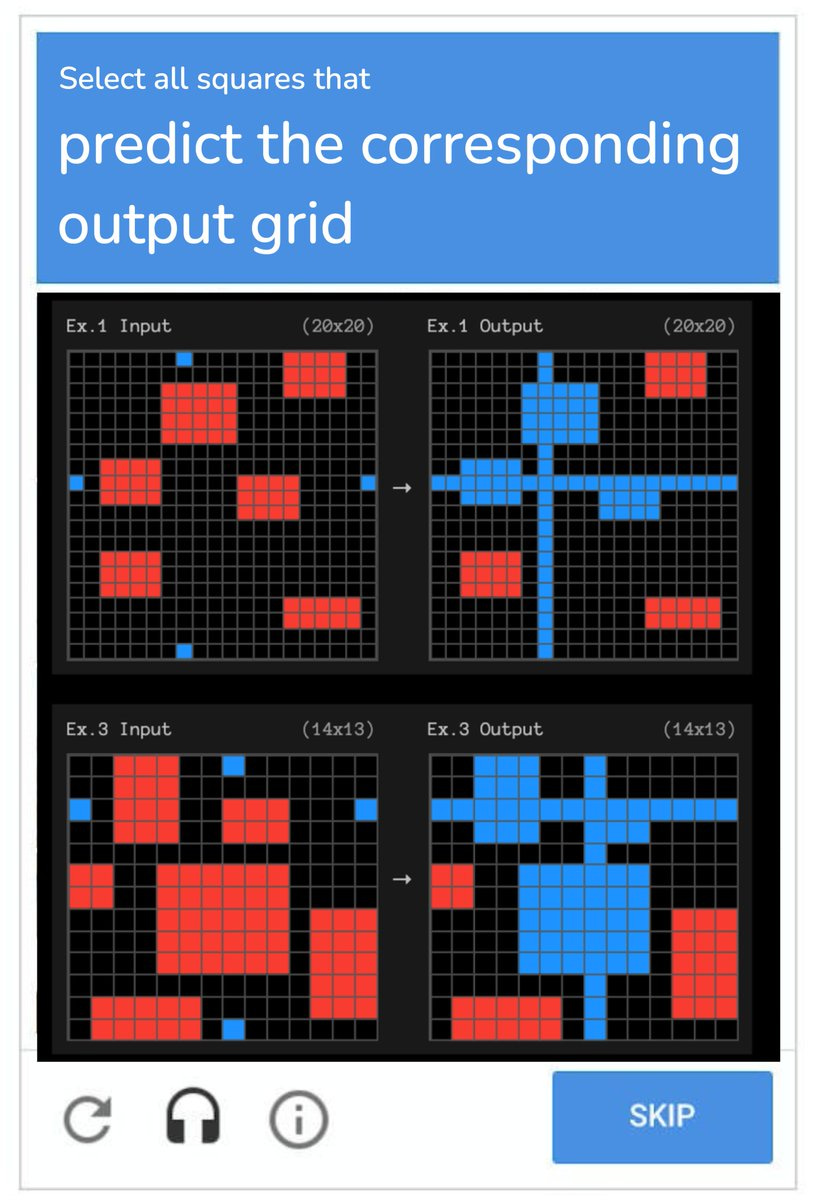

AGI CAPTCHA

If Artificial Insights makes sense to you, please help us out by:

📧 Subscribing to the weekly newsletter on Substack.

💬 Joining our WhatsApp group.

📥 Following the weekly newsletter on LinkedIn.

🦄 Sharing the newsletter on your socials.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.

You are receiving this newsletter because you signed up on envisioning.io or Substack.
