Simulated Fire Doesn't Get Hot (082)

Your weekly countdown to better futures.

Code is the least emotional human language. Poems and novels and letters and lyrics all carry emotion to some extent, and are thus deeply personal, while programming languages are essentially pure function. There is space for personality and opinions in code, but in terms of functions you can offload to AI, I believe it ranks close to the top.

I never had the patience to code. Despite multiple teaching attempts by my engineer father, my ability to implement never matched my level of ambition, which often left me frustrated and unmotivated to deepen my own software development skills. In practice, this left me comfortable speaking the language of coders while having next to no ability to implement what was discussed – which is an ideal position when considering our newfound ability to talk machines into doing our bidding.

Despite my past inability, in the past year I have conceptualized and launched a different coding project each month. These range from a simple web-based Tarot card reading experience with an LLM backend to the more advanced Next.js and React foundations of the new Envisioning website, our AI Vocab, the previously mentioned Purpose app experiment on NavigateWithin and a lot more.

In doing this, I have noticed a couple of foundational skills which might help you strengthen your own abilities in building everything you can imagine.

1. Start Vague

Before jumping into implementation, give yourself space and time to imagine the different ways the thing you want to build can be approached.

Ask yourself questions like: Is it an app or on the web? Is it for yourself or for others?

In my opinion, thinking about what you want to achieve abstractly before considering any kind of technical implementation helps you consider a broader array of possible solutions, instead of leading you down familiar pathways.

Embrace the neutralities afforded by LLMs to approach the problem beyond your known capabilities.

Also make sure to discuss your proposed project with your favorite LLM and spend a few minutes debating possible high-level directions for implementation.

Once you have this, jump into rapid prototyping tools like V0 and Replit to build your first screens.

Use this to create a high-level roadmap of what you want to achieve: which basic functions, interfaces, data sets, inputs and outputs will be required?

2. Iterate Small (Chunking)

Once you have a rough idea of what to achieve, you need to build.

For everything you want to implement with the help of LLMs, try approaching it atomically. In other words: what is the smallest possible implementation detail that you need to build, and how can you make it even smaller?

Instead of asking your AI to “build an app which allows the user to achieve a function by inputting certain data and processing it in a specific way and then outputting these somewhere” you might start with “build a web app in React to be hosted only on my own machine, configured to run and host a simple page app”.

Once you have that working, move on to the next step.
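As a rough illustration of how small a first chunk can be, here is a sketch of a single page served only on your own machine. It uses Python's standard library as a stand-in for the React setup in the prompt above. The names `PAGE`, `OnePageHandler` and `build_server`, and the default port 8000, are placeholders of mine rather than anything from a particular tool.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# The entire "app" for this first chunk: one static page.
PAGE = b"<!doctype html><title>First chunk</title><h1>It works</h1>"


class OnePageHandler(BaseHTTPRequestHandler):
    """Answer every GET request with the same page."""

    def do_GET(self):
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(PAGE)))
        self.end_headers()
        self.wfile.write(PAGE)

    def log_message(self, format, *args):
        # Silence the default per-request logging.
        pass


def build_server(port=8000):
    """Create a server bound to 127.0.0.1, so it is reachable only locally."""
    return HTTPServer(("127.0.0.1", port), OnePageHandler)
```

Calling `build_server().serve_forever()` and opening http://127.0.0.1:8000 in a browser should show the page. Only then is it time to ask for the next small piece.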

Caveat: context windows and model capabilities are quickly changing this, so maybe also lean into things like O1 or Gemini for larger requests.

For each chunk of work, commit the code so you can easily go back to that state. (You will need it).
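One way to make committing after every chunk a habit is a tiny helper. This is a sketch that assumes the project is already a git repository and that git is installed. `checkpoint_commands` and `checkpoint` are hypothetical names, and the `run` parameter exists only so the helper can be tried without touching a real repository.

```python
import subprocess


def checkpoint_commands(message):
    """Return the git commands that snapshot the current working tree."""
    return [
        ["git", "add", "-A"],               # stage every change, including new files
        ["git", "commit", "-m", message],   # record the snapshot with a short note
    ]


def checkpoint(message, run=subprocess.run):
    """Stage and commit everything, stopping at the first command that fails.

    With check=True, subprocess.run raises CalledProcessError on a non-zero
    exit code, for example when there is nothing new to commit.
    """
    for command in checkpoint_commands(message):
        run(command, check=True)
```

After a working chunk you might call `checkpoint("chunk 3: form saves input")`. Running `git log --oneline` then lists the states you can go back to.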

When something doesn’t work, try different models. Claude, Gemini, GPT each have different strengths and problem-solving approaches. You can get surprisingly far by progressively querying different models. Spend those credits!
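The idea of progressively querying different models can be sketched as a simple loop. This makes no real API calls: each entry in `models` is a stand-in callable you would write yourself around whichever provider you use, and `first_accepted_answer` and `accept` are hypothetical names for illustration.

```python
def first_accepted_answer(prompt, models, accept):
    """Ask each model in turn and return (name, answer) for the first good answer.

    models: dict mapping a model name to a callable that takes a prompt
            and returns text (stand-ins for real provider calls).
    accept: callable that returns True when an answer is good enough,
            for example "the tests pass" or "the error is gone".
    Returns None when no model produces an accepted answer.
    """
    for name, ask in models.items():
        try:
            answer = ask(prompt)
        except Exception:
            # One provider failing should not stop you from trying the next.
            continue
        if accept(answer):
            return name, answer
    return None
```

In practice the `accept` step is usually you, reading the answer and running the code, but writing it down as a loop makes the habit explicit: same prompt, next model, until something works.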

If you are new, start by building single-page apps before moving to multiple files and directories.

3. Pareto Principle

In any given moment working intimately side by side with an LLM, I feel you need to know approximately 20% of what is being done while the LLM provides the missing 80%.

You clearly don’t need to understand everything, but if you are completely clueless about what your code is doing, it becomes very difficult to ask good questions.

Maybe your balance will be more like 50/50 or even 90/10 - it doesn’t matter. What’s important is that you consider every interaction from a position of balance. We quickly forget how many things we are bad at.

Instead of avoiding your weak spots, lean into them. Don’t over-exert your expertise. You are probably better than the AI on the stuff you are already good at, so use that lightly and instead use every opportunity to learn something new and build a different capability (in your app and beyond).

4. The Last 1%

Even if you figure out 99% of your app, the last 1% might require help from seasoned coders.

You will probably run into blind alleys which even frontier models have a difficult time backing out of. When this happens, share more context with your AI, such as seemingly unrelated files and frameworks that might not be part of the immediate thing being worked on.

Make sure to share your progress with your more able programmer friends to compare notes and create a shared space of understanding to answer questions and share learnings.

5. Lean In

AI is your infinitely patient friend when answering questions about anything – especially itself.

Use LLMs for everything you need help with: design, code, creativity, opinions, etc. Just ask away!

Troubleshoot everything: use GPT to further your understanding of the field and what is being created.

6. Try New Tools

Don’t be afraid to spend time in unfamiliar apps.

Full-stack simple apps: Claude Artifacts & ChatGPT Canvas.

Prototyping: Vercel V0, Replit, Codeium, TLDraw MakeReal.

If you have other tips or input - please share them with me. Would be fun to organize this as a workshop. Let me know if you’d be interested.

What triggered this post?

Last week I had the opportunity to hang out with brilliant minds at THNK planning their next and future initiatives around AI, and it occurred to me how much our capacity to prototype & build has changed in the past year.

Instead of requiring weeks of experimentation, we can build things live now. We should not replace accuracy with speed, but it helps to quickly put ideas into action and try our own skills at literally just building things.

Please share this edition with everyone you think needs a push to level up their AI building skills - and don’t be afraid to like the post!

Until next week,

MZ

PS. Thanks Chris for asking the questions that led to this writeup and Laly 🤍 for the image suggestion!

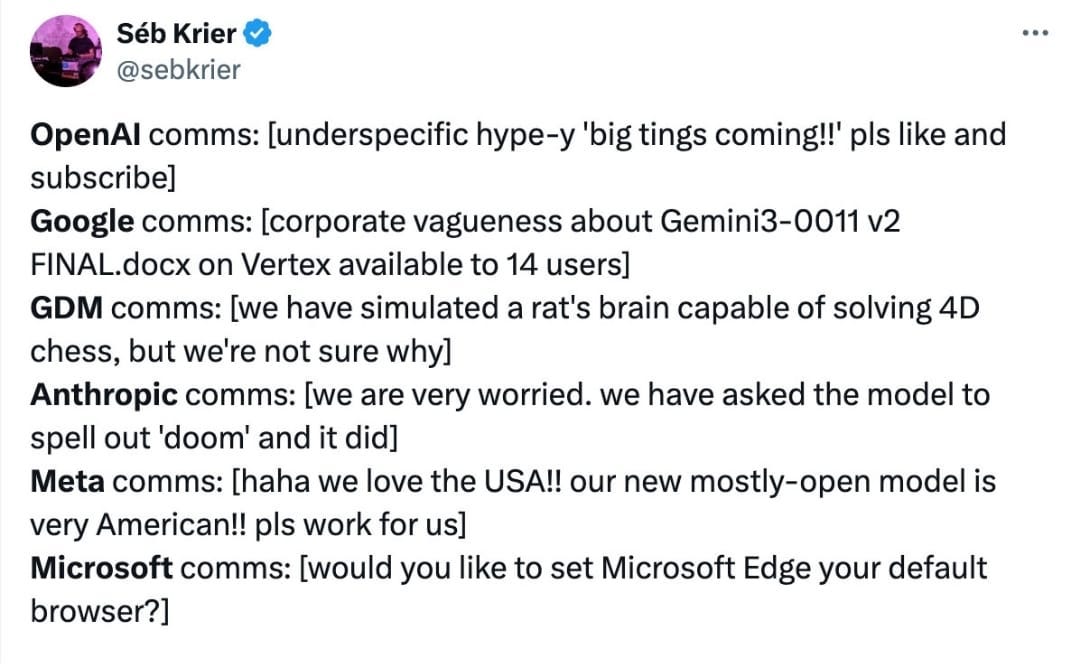

Breaking Down GPT-O1 Pro (17 min)

Excellent analysis on O1 and the $200 per month O1 Pro, highlighting improved reasoning and reliability but questioning their value given limited advances and safety concerns. In short: the models show potential but fall short of being transformative. Thanks Chris!

Dreams, Creativity, and the AI Paradox (1h10)

Kenneth Stanley raises existential questions about a future where AI surpasses human capabilities, potentially diminishing human dreams and self-expression. While AI can liberate time and amplify creativity, it challenges the value of human effort in a world where machines might outperform us in every way.

Self-expression is an essential part of human experience, and we can’t lose that to consumption or automation.

Sam Altman doing his thing (35 min)

TIL: ChatGPT has 300M MAU and receives 1B messages per day.

Reinforcement Fine-tuning (20 min)

From OpenAI’s 12 days of shipmas: Reinforcement fine-tuning introduces a new method for tailoring AI models to excel in specialized domains. By enabling models to adapt using minimal data, this approach offers promising applications in areas like healthcare and legal analysis, where precision and reasoning are critical.

AWS Innovations for 2025 (1h20)

Amazon is a boring but interesting company, which essentially bootstrapped the cloud computing industry with AWS, so worth paying attention to.

"Trainium2’s architecture addresses specific AI needs, minimizing memory bottlenecks and optimizing performance through its systolic array design." – Peter DeSantis

The 70% problem

If the intro made sense to you, don’t miss this article by Addy Osmani on his own approach for AI-assisted coding.

Quick Links

Impressive image-to-3D by Worldlabs.

OpenAI seeks to unlock investment by ditching ‘AGI’ clause with Microsoft (FT)

Exciting new model from Google DeepMind: Genie 2, a foundation world model.

This is interesting: a pair of students created an invisible desktop application to help software developers with technical interviews. I bet we'll see this for other categories and evaluations soon.

OpenAI’s Sora 2 is apparently being previewed with remarkable character consistency.

I spent 8 hours testing o1 Pro ($200) vs Claude Sonnet 3.5 ($20) - Here's what nobody tells you about the real-world performance difference (excellent thread on /r/OpenAI)

AI Safety Testers: OpenAI's New o1 Covertly Schemed to Avoid Being Shut Down (harrowing Slashdot thread). Thanks Ricky!

Interesting approach for diffusion generated imagery by Pentagram for the US Federal Government.

6 AI trends for 2025

AI models will become more capable and useful

Agents will change the shape of work

AI companions will support you in your everyday life

AI will become more resource‑efficient over time

Measurement and customization will be keys to building AI responsibly

AI will accelerate scientific breakthroughs

If Artificial Insights makes sense to you, please help us out by:

📧 Subscribing to the weekly newsletter on Substack.

💬 Joining our WhatsApp group.

📥 Following the weekly newsletter on LinkedIn.

🦄 Sharing the newsletter on your socials.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.

You are receiving this newsletter because you signed up on envisioning.io or Substack.