Robots are parts of corporations that we have stuck inside our houses (005)

Your weekly review of our transition toward AGI and computational creativity.

Not a week goes by without a major corporation announcing groundbreaking developments in their AI approach. For better or worse, we are experiencing a transition in business models and value generation that relies on increased automation against a decreasing surface area of attention. Our ability to absorb information shows no sign of keeping pace with the glut of data these systems generate, leaving more people short on energy to even try keeping up. I’m no exception, and I believe the only sensible way forward is building systems that help us nurture our underlying humanity, imagination and judgement. This week’s links hope to capture that sentiment through the words (and memes) of others.

MZ

Do you worry about mistreating robots? 🐄 (30 min)

I had the opportunity to watch professor Joanna Bryson speak live in Lisbon last week about developing safe and responsible autonomous systems. While I haven’t found a recording of that session, this recent recording of Joanna lecturing in Berlin comes very close, addressing the perils of our recommendation systems and the risk of systemic negligence from our governing classes. I particularly agree with Joanna’s take that organizations should be held liable for keeping, not discarding, decision data as part of their due diligence. AI is not something to be blindly trusted, but rather something that needs to be continuously audited and held accountable.

Robots are parts of corporations that we have stuck inside our houses.

“The future of AI and more” 🕰️ (50 min)

Surprisingly earnest conversation between Patrick Collison and Sam Altman. I’m impressed with the openness of the conversation, which touches on actual personal use of LLMs, the role of China, the potential of global coordination for AI safety and the importance (and difficulty) of funding long-term projects. Bonus points if you don’t mind Altman’s vocal fry.

Onboarding your AI assistant 👋 (20 min)

Excellent framework for navigating different kinds of AI augmentation by GPT hero and Wharton professor Ethan Mollick. I especially like the framing around Centaurs and other kinds of task categories. Don’t miss Mollick’s Substack or Twitter feed for cutting-edge insights into LLM and prompt explorations. Via Gustavo.

Are emergent abilities of LLMs a mirage? 🏝️ (16 pages)

Paper from Stanford researchers Rylan Schaeffer, Brando Miranda and Sanmi Koyejo presenting strong supporting evidence that emergent abilities may not be a fundamental property of scaling AI models. Instead, LLMs only display such abilities under narrowly defined circumstances, which are not always replicable. Via Caroline.

What controls which abilities will emerge? What controls when abilities will emerge? How can we make desirable abilities emerge faster, and ensure undesirable abilities never emerge? These questions are especially pertinent to AI safety and alignment, as emergent abilities forewarn that larger models might one day, without warning, acquire undesired mastery over dangerous capabilities.

Digital immortality and the future of work ☠️ (30 min)

Insightful interview with Yuval Noah Harari and Yann LeCun in Le Point about the emergent abilities of computers. Via Iolanda.

Once you can feel pleasure, you have consciousness. Self-consciousness is something else, it's the ability to reflect on the fact that you feel emotion. And we have self-consciousness only a very small part of the time. Based on this definition, I think it's totally possible that machines will gain consciousness or have feelings. But it is not inevitable.

How Generative AI Changes Creativity 🎨 (35 min)

HBR editor in chief Adi Ignatius interviews video artist Don Allen and innovation researchers Jacqueline Ng Lane and David De Cremer. The discussion centers on the benefits of quick iteration and skipping steps in the creative process, and how this changes the nature of collaboration by eliminating some kinds and creating others. They touch on how quickly our expectations of what counts as impressive shift, as well as the importance of showing your work as part of the process. The group also discusses how to incorporate generative AI into creative workflows without replacing human input, so as to encourage serendipity and group discussion. Other topics include the nature of authenticity, increased efficiency across the board, and how focusing on doing what we enjoy can lead to greater work (and life) satisfaction. I particularly liked the shoutout to Wonder Studio, which will be featured in an upcoming edition of this newsletter.

Emerging Vocabulary

Overhang

The difference between the minimum amount of computation required to achieve a particular level of performance and the actual amount of computation used in training a model. This extra computation can result in a model that is more accurate or performs better than it needs to for a given task. Overhang is an important concept in AI research as it can help researchers understand the limits and potential of different machine learning algorithms and architectures.
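
To make the definition above concrete, here is a minimal Python sketch of the arithmetic. The function name `compute_overhang` and the FLOP figures are hypothetical, chosen only for illustration and not taken from any real model or paper.

```python
def compute_overhang(actual_compute: float, minimum_compute: float) -> float:
    """Return the overhang: compute actually used minus the minimum required.

    Both arguments are assumed to be in the same unit (for example, FLOPs).
    """
    if actual_compute < 0 or minimum_compute < 0:
        raise ValueError("compute budgets must be non-negative")
    return actual_compute - minimum_compute


# Hypothetical training budgets in FLOPs, for illustration only.
actual = 5e21
minimum = 2e21

overhang = compute_overhang(actual, minimum)
print(f"Overhang: {overhang:.1e} FLOPs")
```

A positive result means more computation was spent than the target performance strictly required; a result near zero means the training run was close to the minimum.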

Project Showcase

Phind.com

Phind is a search engine that simply tells users what the answer is. Optimized for developers and technical questions, Phind instantly answers questions with detailed explanations and relevant code snippets from the web.

SponCon

Envisioning Sandbox

Envisioning is developing a platform for organizing workshops using artificial intelligence to augment your creative flow. To demonstrate our approach, we are hosting a public demo next week to present the platform and share early access links.

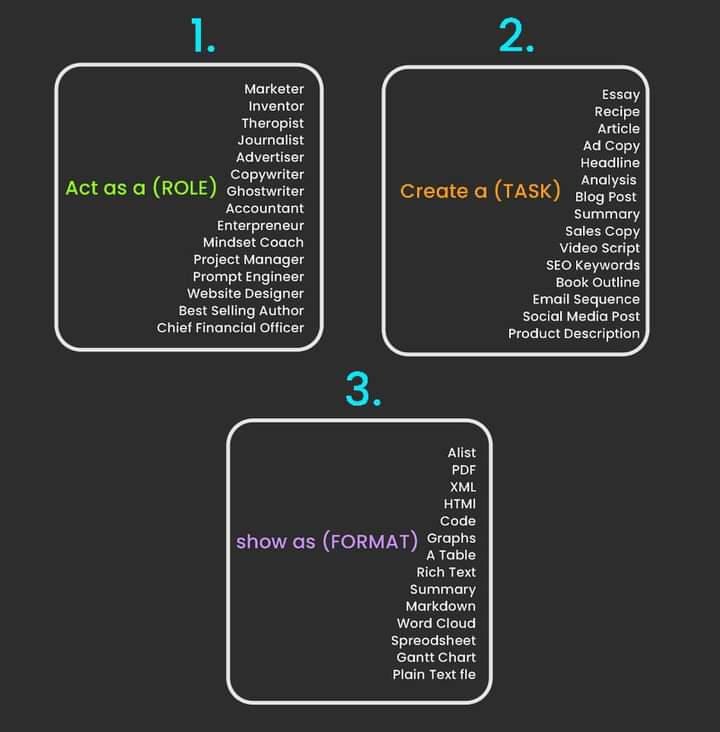

Prompt Techniques

Technology & Culture

From fireplaces to fascination

By Luma Eldin: The control of fire was a crucial technological leap that deeply affected human culture and society. It sparked key cultural transformations, influencing the development of civilization. Cooking with fire simplified digestion and added pleasure to eating, turning it from a survival function into a social activity. Fireplaces became communal hubs, promoting social cohesion, possibly nurturing complex language and expression. However, despite its benefits, fire still poses significant risks today, from property damage and air pollution to carbon emissions. Similarly, as we explore artificial intelligence, we must balance its risks and rewards, keeping in mind the potential cultural impact. This technology could both augment and test our established norms and traditions. AI holds great promise for catalyzing innovation across various cultural fields, including music, fashion, and art. However, the uncertainties linked to AI's societal, ethical, and privacy impacts necessitate prudent management of this powerful technology.

If Artificial Insights makes sense to you, please help me out:

Subscribe to the weekly newsletter on Substack.

Forward this issue to colleagues and friends wanting to learn about AGI.

Share the newsletter on your socials.

Comment with your favorite talks and thinkers.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.
