Minds among us, or: exploring non-human intelligence (012)

Welcome to Artificial Insights: your weekly review of how to better collaborate with AI.

Happy Monday and welcome to another edition of Artificial Insights. Thanks to everyone who shared suggested names and links to thinkers and researchers I should follow. In such a broad and fast-moving field as artificial intelligence, it is a constant challenge to even decide who to read and what to focus on. In publishing this newsletter I hope to help you navigate and learn from some of the voices shaping our (inevitable) future of more and different kinds of intelligence.

This week features a few short talks and articles exploring the implications and possible consequences of the explosion in intelligence we are experiencing. I have a strong suspicion that we will learn a lot more about non-human intelligences in the near future – there are probably many more kinds of intelligence out there than our anthropocentric worldview has us consider. It would be arrogant and short-sighted to imagine our current selves at the peak of intellectual evolution – and it is arguably wise to learn about possible kinds of intelligence lurking around the corner.

MZ

Will Superintelligent AI End the World? 💥

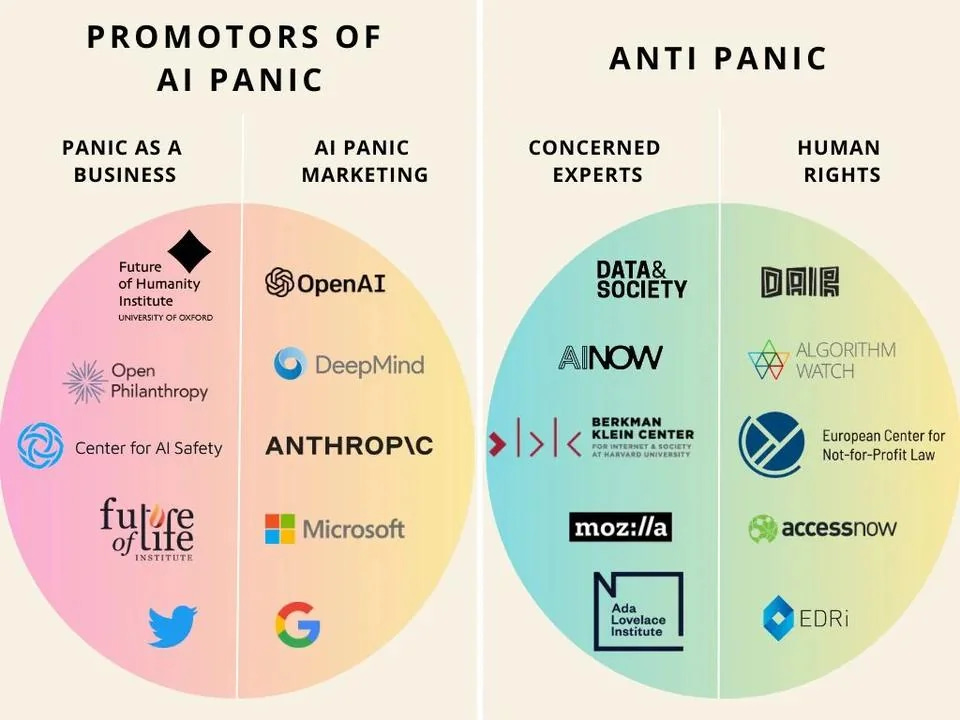

Eliezer Yudkowsky discusses the potential risks of creating a superintelligent AI that surpasses human understanding. Without a serious approach to aligning superintelligence with human values, conflict with an AI entity that does not share our values could pose a significant threat to humanity. Yudkowsky does not advocate violence as a means to address the issue but acknowledges the push for an international reckoning on managing the risks associated with AI.

Disrupting Data Injustice 🔬

Renée Cummings discusses the challenges and ethical considerations associated with the use of data and AI. She highlights the potential for data to perpetuate biases and injustices, particularly in marginalized communities, and emphasizes the importance of responsible and ethical technology deployment. Cummings calls for greater transparency, diversity, and interdisciplinary collaboration in the design and use of data science tools.

Demis Hassabis on Ezra Klein ♟️

In conversation with Ezra Klein, Demis Hassabis, co-founder of Google DeepMind and Isomorphic Labs, shares insight into the cutting edge of AI research and his experience working in the field. A dense and technical conversation, well worth a listen, especially to understand how AI might affect research and help us address critical global challenges.

Could an Orca Give a TED Talk? 🐳

Karen Bakker discusses how scientists are using artificial intelligence to decode non-human communication and gain a deeper understanding of the intricate communication networks in the non-human world. Fascinating how bats and orcas, whose vocalizations have been decoded using AI, reveal complex communication systems and dialects.

UN Security Council meets for first time on AI risks 🇺🇳

Short report by BBC News on how the UN Security Council is starting to discuss AI risk, international cooperation and regulation. AI has both beneficial and harmful potential uses, and both need to be weighed and addressed for progress to be possible.

Can AIs suffer? 🥽

Yuval Noah Harari delves into the power of storytelling and the impact it has on shaping human beliefs and history. He emphasizes that fictional stories and human-created rules, despite being products of imagination, hold significant consequences in society. He warns of the allure of simple and attractive narratives and the need to critically examine them to understand their underlying implications.

We are living in a world of illusions created by an alien intelligence that we don't understand but which understands us, is a form of spiritual enslavement which we can't break out of.

Emerging Vocabulary

Cross Validation

Technique for evaluating the performance of a model on a dataset. It is essential because it provides a more robust estimate of how well a model will perform when used to make predictions on unseen data. The basic idea of cross validation is to divide the available data into two sets: the training set and the validation set. The training set is used to fit the model's parameters, while the validation set is used to evaluate the model's performance. This process is repeated multiple times, each time using different portions of the data as the validation set, and then averaging the evaluation results across these iterations. By doing so, cross validation provides a more reliable estimate of a model's generalization performance, allowing for a more informed decision about which model to select.
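For readers who like to see the idea in code, here is a minimal sketch of k-fold cross validation using only the Python standard library. Everything in it is an illustrative assumption rather than part of the definition above: the helper names (`k_fold_indices`, `fit_line`, `cross_validate`), the choice of a simple straight-line model, mean squared error as the score, and the toy data. In practice a library such as scikit-learn would usually handle the splitting.

```python
import random
from statistics import mean


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle the indices 0..n_samples-1 and deal them into k near-equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept (the hypothetical model)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def mean_squared_error(model, xs, ys):
    """Average squared gap between the line's predictions and the true values."""
    slope, intercept = model
    return mean((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys))


def cross_validate(xs, ys, k=5, seed=0):
    """Hold out each fold once, train on the rest, and average the fold scores."""
    scores = []
    for held_out in k_fold_indices(len(xs), k, seed):
        held = set(held_out)
        train = [i for i in range(len(xs)) if i not in held]
        model = fit_line([xs[i] for i in train], [ys[i] for i in train])
        scores.append(
            mean_squared_error(
                model, [xs[i] for i in held_out], [ys[i] for i in held_out]
            )
        )
    return mean(scores), scores


# Toy data (an assumption for illustration): a noisy straight line.
rng = random.Random(42)
xs = [float(i) for i in range(30)]
ys = [2.0 * x + 1.0 + rng.gauss(0, 1) for x in xs]

avg_mse, fold_mses = cross_validate(xs, ys, k=5)
print("per-fold MSE:", [round(m, 3) for m in fold_mses])
print("average MSE:", round(avg_mse, 3))
```

Each data point lands in the validation set exactly once, so the averaged score reflects performance on data the model did not see during fitting. Comparing this average across candidate models is one way to make the "informed decision" the definition mentions.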

View all emerging vocabulary entries →

If Artificial Insights makes sense to you, please help us out by:

Subscribing to the weekly newsletter on Substack.

Following the weekly newsletter on LinkedIn.

Forwarding this issue to colleagues and friends wanting to learn about AGI.

Sharing the newsletter on your socials.

Commenting with your favorite talks and thinkers.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.

You are receiving this newsletter because you signed up on envisioning.io.

Michel, on machine validation. I now have our robots validating themselves and each other, pointing out potential biases, fake news, and misinformation. I can then correct these if they occur, which is now near zero. Then there is our own human reality check from ourselves and our expert panel. The results have been excellent. Client feedback has been excellent too, with no complaints thus far. On the contrary, our experts and clients say a universal Wow! to the machines' findings and marvel at how much the AIs know about their business.