It’s Only Going To Get Weirder (097)

Vibing our way to the singularity.

Happy Monday and welcome to your weekly resort experience at the lotus of AI.

This week’s issue is brought to you from a surprisingly sunny London where I have spent the last few days being a tourist, book shopper and urban explorer (without necessarily relying on AI). London is the world’s second most important city for nurturing the technologies leading us to AGI, and I am relieved the Silicon Valley ethos of moving fast & breaking things never took root in the culture of DeepMind and their ilk.

Where and how AI companies develop matters greatly, as their cultures inevitably reflect the broader contexts in which they emerge. Only the Bay Area could have produced today’s foundational technologies, with its particular blend of entrepreneurship, risk tolerance, and public-academic synergy. The UK (and Europe more broadly) shares some of these traits, but still struggles to manufacture technological success at anything close to the scale or relevance of the U.S.

A diverse set of AI producers is essential. Different strategies will play out unpredictably in the coming decades, and avoiding an AI monoculture is critical for countless reasons. While I personally enjoy the chirpy, do-gooder vibes of Californian AIs like Claude and ChatGPT, other values and capabilities must be explored if we’re to maximize the potential of our future synthetic underlings.

While the UK may lag in scale, it’s taking a quietly assertive lead in shaping the norms of responsible AI, hosting the world’s first AI Safety Institute and apparently embedding ethical governance across its public sector. In contrast to the Valley’s relentless velocity, this more measured approach may prove foundational as we navigate the messy reality of deploying AI systems in society.

How this unfolds is anybody’s guess, but I am thrilled to be on this ride with you.

Until next week,

MZ

The best links from the community

Iolanda: Anthropic just announced real-time web search in Claude.

Richard: Meta AI has arrived in Europe and the UK. Learn new things, brainstorm ideas, and ask questions, right from inside the app.

Greg: This touched nerves yesterday, but I’m a longtime fanboi of Simon Wardley and his Wardley Maps for how they provide great tools for rethinking strategy.

Chris: A turn-by-turn commentary on the race to the finish line. But who's that taking an illegal shortcut…

Reinier: OpenAI to start testing ChatGPT connectors for Google Drive and Slack.

Superb examples of using Midjourney to create images of things that would be infeasible or impossible to photograph.

Sam Altman on the future of AI (45 min)

Strategies for adapting to a changing world and our hopes for technology that enhances human progress while maintaining human values. Thanks Bas!

The Sovereignty of Truth (1h40)

Spectacular lecture from Dr. Iain McGilchrist. Only marginally about AI, but a tour de force about the importance of truth and beauty. The second part is here.

Controlling powerful AI (50 min)

Anthropic researchers Ethan Perez, Joe Benton, and Akbir Khan discuss AI control, an approach to managing the risks of advanced AI systems. They cover real-world evaluations showing how humans struggle to detect deceptive AI, the three major threat models researchers are working to mitigate, and the broader idea of controlling highly capable AI systems whose goals may differ from our own.

Navigating AGI Discourse in Foresight

Excellent study about the emergence of AGI and its effect on foresight by the Copenhagen Institute for Future Studies. Worth noting that Ray Kurzweil called this inflection point a singularity in the sense that we cannot see past its event horizon from the present. I suppose this has always been the case, but AI is definitely fueling accelerating change. Thanks Inês!

For foresight practitioners, the challenge is not just to engage with AGI as a technological possibility, but to do so in a way that is both imaginative and strategically relevant.

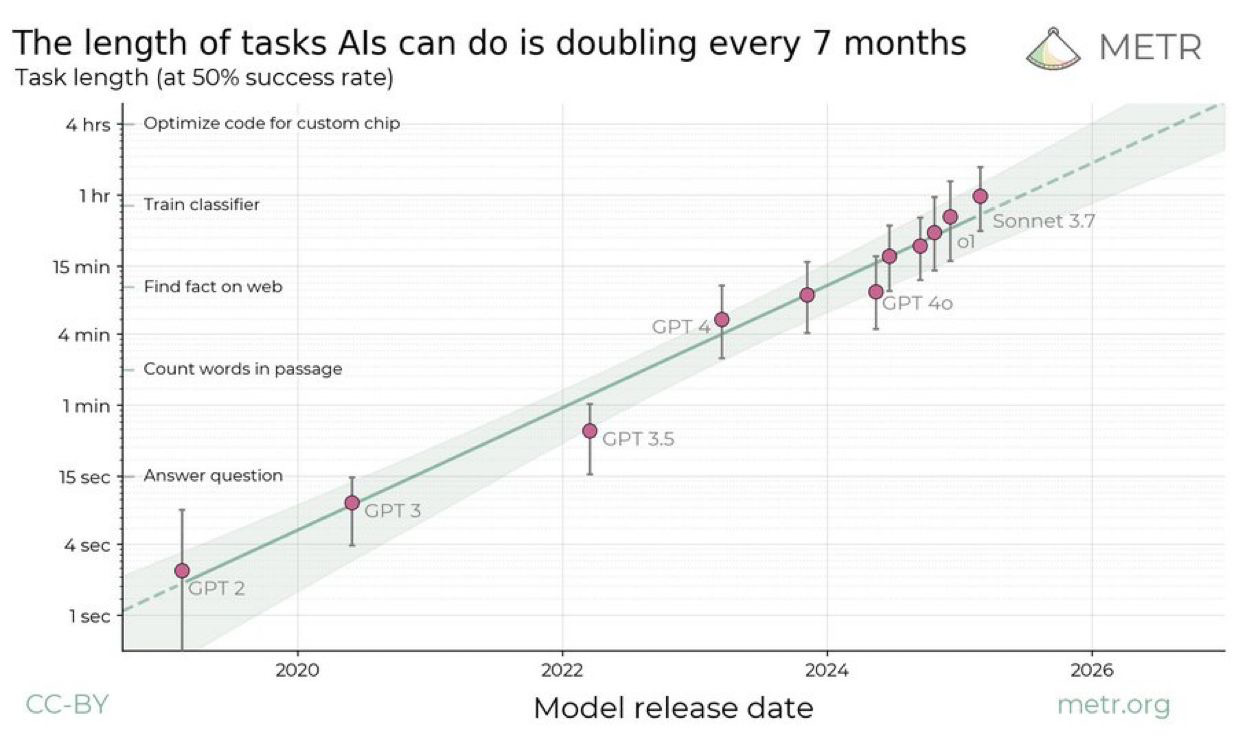

Measuring AI Ability to Complete Long Tasks

To quantify the capabilities of AI systems in terms of human capabilities, we propose a new metric: 50%-task-completion time horizon. This is the time humans typically take to complete tasks that AI models can complete with 50% success rate.
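To make the metric concrete: one way to estimate such a horizon (an assumption here, not necessarily the study's exact procedure) is to fit a logistic curve of success rate against the logarithm of human task time, then read off where it crosses 50%. The sketch below does this with plain gradient descent. `fit_horizon`, `sigmoid`, and the task data are hypothetical. The success rates are synthetic, generated from a known 60-minute horizon so the fit can be checked.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_horizon(minutes, success_rates, lr=0.5, steps=20000):
    """Hypothetical helper: fit p = sigmoid(a + b * x), with x = centered log2(task minutes),
    by gradient descent on cross-entropy, then return the task length where p = 0.5."""
    xs = [math.log2(m) for m in minutes]
    mean_x = sum(xs) / len(xs)
    xs = [x - mean_x for x in xs]  # centering keeps gradient descent well conditioned
    a, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for x, y in zip(xs, success_rates):
            err = sigmoid(a + b * x) - y  # gradient of cross-entropy w.r.t. the logit
            grad_a += err
            grad_b += err * x
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    # p = 0.5 exactly where a + b * x = 0, i.e. x = -a / b (in centered log2 units)
    return 2 ** (mean_x - a / b)


# Synthetic, illustrative data: success falls off as tasks get longer for humans.
minutes = [1, 4, 15, 60, 240, 960]
true_horizon = 60.0  # hypothetical value, chosen only to check the fit
rates = [sigmoid(-(math.log2(m) - math.log2(true_horizon))) for m in minutes]
print(round(fit_horizon(minutes, rates)))  # prints 60
```

Working in log time reflects the idea that a model's success drops off over orders of magnitude of task length, not minute by minute.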

The length of tasks that AI can complete at a 50% success rate doubles every 7 months.

Extrapolating that trend, the authors estimate that within 5 years AIs will be able to complete software engineering tasks that would take a human engineer a month.

Today's models are still limited by the complexity of real-world "messy" tasks, but they are getting better.
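The extrapolation is compounding arithmetic: a horizon H₀ that doubles every 7 months grows to H₀ · 2^(t/7) after t months. Here is a minimal sketch that assumes a hypothetical current horizon of 1 hour and a working month of about 167 hours (40 hours a week). Neither figure comes from the study, and `horizon_after` and `months_to_reach` are illustrative helpers.

```python
import math

DOUBLING_MONTHS = 7  # doubling period quoted above


def horizon_after(start_hours: float, months: float) -> float:
    """Projected 50% time horizon after `months`, assuming the doubling trend holds."""
    return start_hours * 2 ** (months / DOUBLING_MONTHS)


def months_to_reach(start_hours: float, target_hours: float) -> float:
    """Months until the horizon reaches `target_hours`: solve start * 2^(t/7) = target for t."""
    return DOUBLING_MONTHS * math.log2(target_hours / start_hours)


# Hypothetical inputs: 1-hour horizon today, ~167 working hours in a month.
print(round(months_to_reach(1.0, 167.0), 1))  # prints 51.7
```

Under these assumptions, a month-long task is about 7.4 doublings away, or roughly 4.3 years. That falls inside the five-year window, though the answer shifts by 7 months for every factor of two in the assumed starting horizon.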

The hyperobject at the end of time (60 min)

This week's subject line comes from one of my favorite philosophers and psychonauts, Terence McKenna, in this evergreen interview recorded 25 years ago. Terence was a visionary on a whole different wavelength who could see the future (our present) with more clarity than most people alive today. Turn on, tune in & drop out.

If Artificial Insights makes sense to you, please help us out by:

📧 Subscribing to the weekly newsletter on Substack.

💬 Joining our WhatsApp group.

📥 Following the weekly newsletter on LinkedIn.

🦄 Sharing the newsletter on your socials.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.
