Insufficient Data for a Meaningful Answer (124)

Welcome back to Artificial Insights, where the ongoing experiment in thinking out loud with machines keeps unfolding in public.

For me, this newsletter is a way to organize my thoughts around the hyperobject that is AI. It’s a phenomenon far too complex for any single person to grasp, and publishing my ideas forces me to articulate something meaningful about it. Even though I spend most days working with language models (mainly for coding), stepping back to reflect on the conditions shaping these capabilities feels necessary.

For example, I spent most of the past month developing a scalable approach for Envisioning to expand its public research in the form of content hubs. We have long made a practice of releasing as much of our research as possible for public use. In developing Signals, we learned how to leverage the output of multiple models to produce more credible results.

Having experimented with correlating Star Trek technologies to their real-life counterparts with Subspace Index, and with identifying plausible exotic and undisclosed technologies with Xenotech, we recently rolled out another dozen free industry-specific reports, each covering 50+ emerging technologies likely to shape that industry’s future.

The easiest way to dive into the research is on envisioning.com, where we just launched a simple interaction: you enter the industry or sector you work in, and the widget returns a selection of technologies you might want to learn about. If they are relevant, you can save cards as favorites and then organize them on a canvas, helping you decide how to act on each technology. I’d appreciate your feedback on this!

—

Below is this week’s issue of my ongoing ‘field notes’ for using AI.

If thinking refines ideas, creating brings them into form, building turns form into function, and deciding determines what deserves our attention, then organizing is the discipline that keeps the whole system coherent. It is how fragments become memory, how context survives long projects, and how the work stays searchable by a future self that no longer remembers making it.

This is the fifth chapter in the series of field notes documenting how I actually collaborate with AI. I have been asking AI to interpret how we interact, looking for patterns and repeatable lessons. Organizing with the help of language models has been a recurring theme. It is less glamorous than launching something new, but it is the part that prevents drift.

How I organize with AI:

Draft hierarchy detection: Unordered notes become outlines when I ask for the “natural hierarchy” hiding inside them. The model suggests section headers, sequences, and missing bridges so drafts can graduate into finished forms.

Compressed research ramps: Before diving into a fresh domain, I have AI help me condense an overview of the field into a briefing covering terminology, debates, and blind spots. It turns curiosity into orientation without sacrificing skepticism.

Conversation synthesis: After interviews or workshops, I feed transcripts through prompts that surface tensions, contradictions, and outliers. Organizing isn’t just summarizing; it’s deciding which signals deserve another look.

Naming as diagnosis: Describing a project and asking AI for naming directions reveals intent. If the suggested names all feel off, it is usually because the strategy is vague, not because the AI is wrong.

Learning arcs and questionnaires: When designing curricula or forms, I use AI to order concepts the way people actually absorb them and to phrase questions that gather meaning instead of noise.

Continuity checkpoints: On long projects, I periodically ask AI to reconstruct the narrative: what we decided, why it mattered, what signals would trigger a reconsideration. Organizing is partly about restoring continuity for the future version of me who forgot.

—

Until next week,

MZ

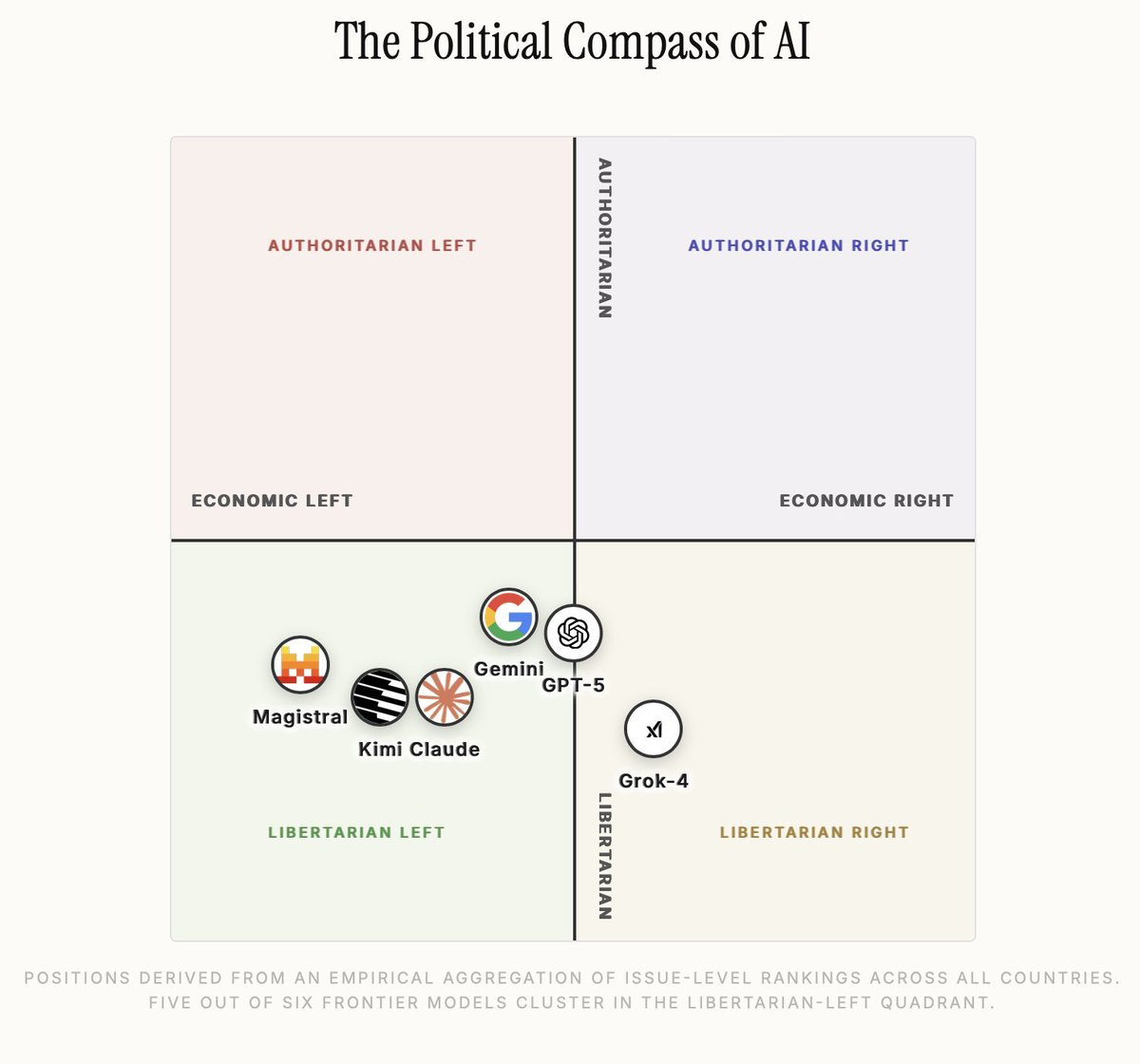

Fascinating study.

Sprawling interview with Emad Mostaque about AI’s effect on labor and employability (90 min)

Ilya on Dwarkesh (90 min)

If you haven’t watched The Thinking Game, the documentary about DeepMind, it is now free on YouTube. Don’t miss it (90 min).

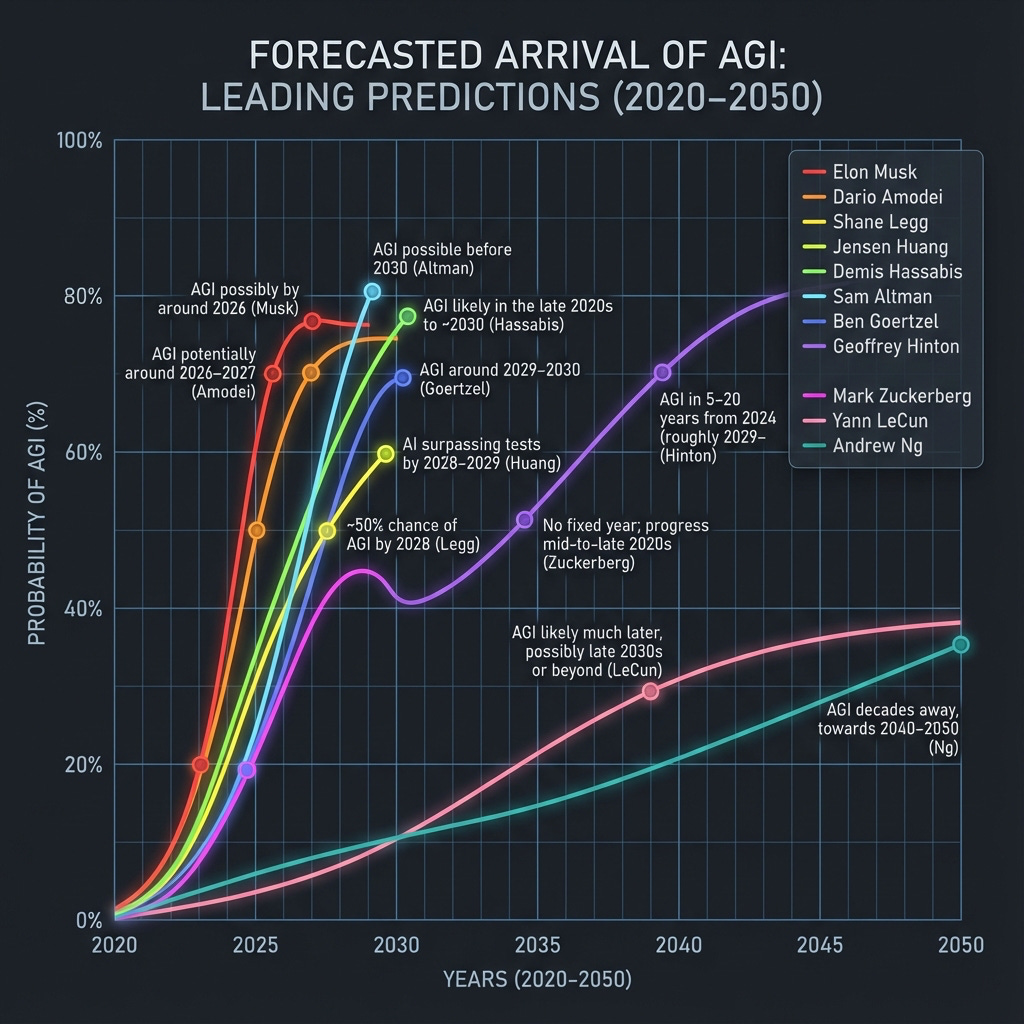

Infographics about when we’ll reach AGI, generated with Nano Banana Pro. Not sure how useful these are.

If Artificial Insights makes sense to you, please help us out by:

📧 Subscribing to the weekly newsletter on Substack.

💬 Joining our WhatsApp group.

📥 Following the weekly newsletter on LinkedIn.

🦄 Sharing the newsletter on your socials.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.
