Futures Collapse (105)

Why multiple AIs outperform single ones.

It is difficult to picture how different AIs display biases in their output data.

We assume our models to be neutral, yet it’s trivial to pinpoint examples of forced behavior, like Golden Gate Claude, the purpose-built variant of Anthropic’s assistant that smuggled San Francisco’s iconic bridge 🌉 into almost every reply, regardless of topic. Subtler biases go unnoticed.

Spotting these traits in models is challenging, and we have only recently started developing the skills necessary to notice biases when we interact with AIs.

Last week Grok, an AI model created by xAI, began shoe-horning references to a conspiratorial “white genocide” in South Africa into unrelated conversations. The episode, blamed on a rogue prompt injection, is a textbook reminder that a model’s worldview can be bent—sometimes clumsily—by anyone with access to its levers.

Such mishaps are not mere curiosities.

LLMs are trained on oceans of text, but each ocean is charted differently.

OpenAI’s GPT is steeped in Anglo-American discourse; Google’s Gemini is tethered to the live web; Anthropic’s Claude is tuned to err on the side of safety; Mistral’s models reflect a more European palette; Grok is marinated in the brash vernacular of X; Meta’s Llama rests on open-source ideals.

Ask the same question and their answers diverge like newspaper editorials. In that divergence lie both risk and opportunity. Sensible strategists therefore treat models like any other asset: diversify, compare, rebalance.

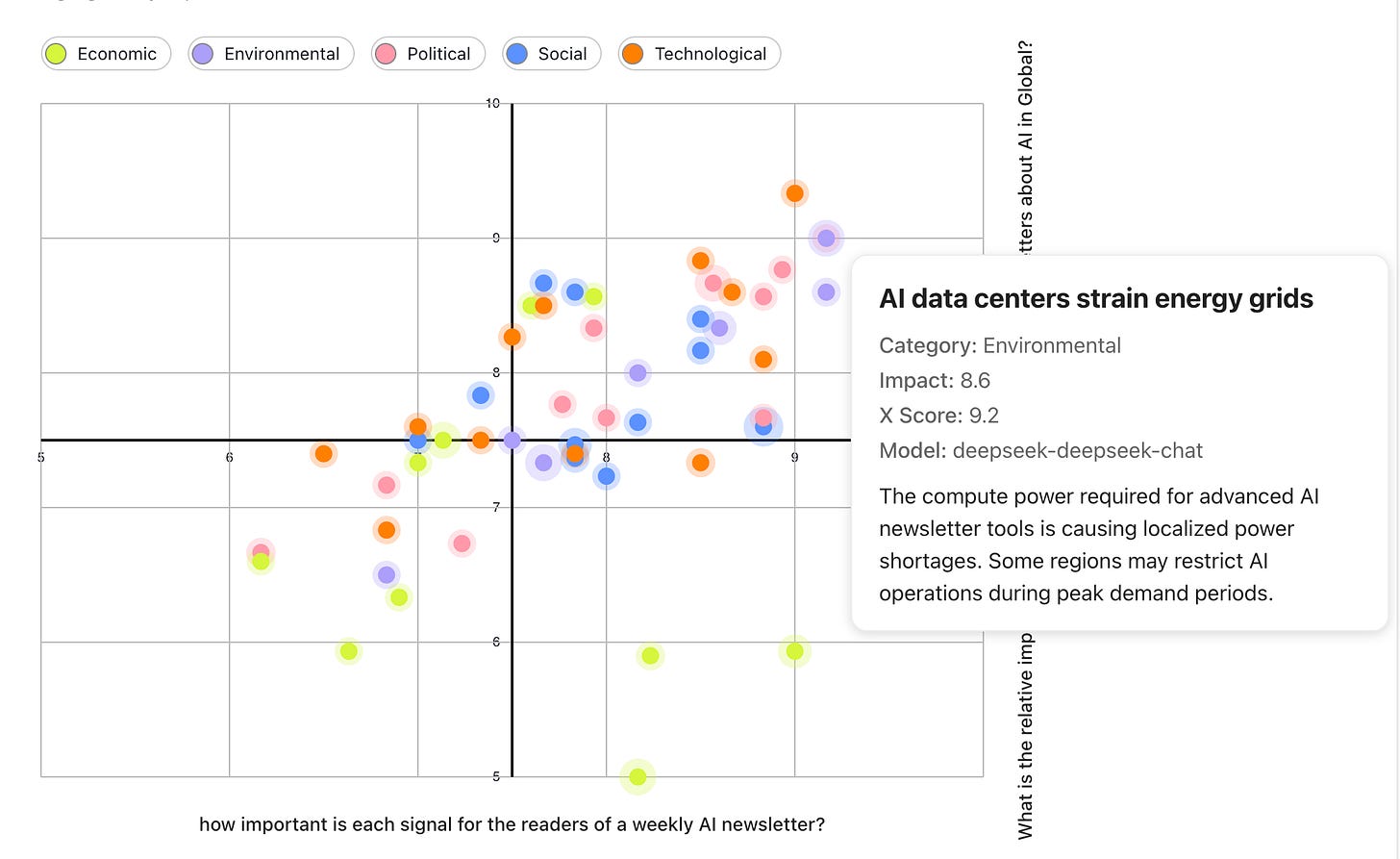

Signals, our ensemble AI research tool, does just that: running identical prompts through multiple engines, stripping out duplicates, and identifying coherence between their answers.

Agreement across models raises confidence; conflict highlights implicit assumptions. The result is not a single synthetic truth but a spectrum of possibilities that can be weighed by your judgment.
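For readers who want to see the idea in miniature, here is a rough sketch of the compare-and-deduplicate step. It is not how Signals is built: the model names, the sample answers and the helper functions are all hypothetical, and exact matching after light normalization stands in for whatever semantic matching a real tool would need.

```python
from collections import defaultdict


def normalize(signal: str) -> str:
    """Lowercase and collapse whitespace so trivially different phrasings match."""
    return " ".join(signal.lower().split())


def compare_models(answers: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map each de-duplicated signal to the sorted list of models that produced it."""
    sources = defaultdict(set)
    for model, signals in answers.items():
        for signal in signals:
            sources[normalize(signal)].add(model)
    return {signal: sorted(models) for signal, models in sources.items()}


def split_by_agreement(sources: dict[str, list[str]], min_models: int = 2):
    """Separate signals several models agree on from those only one model raised."""
    shared = {s: m for s, m in sources.items() if len(m) >= min_models}
    unique = {s: m for s, m in sources.items() if len(m) < min_models}
    return shared, unique


# Hypothetical answers to one identical prompt (assumed data, not real model output).
answers = {
    "model_a": ["Remote work reshapes cities", "AI tutors in schools"],
    "model_b": ["remote work  reshapes cities", "Lab-grown meat goes mainstream"],
    "model_c": ["AI tutors in schools", "Remote work reshapes cities"],
}

sources = compare_models(answers)
shared, unique = split_by_agreement(sources)

for signal, models in shared.items():
    print(f"agreed ({len(models)} models): {signal}")
for signal, models in unique.items():
    print(f"only {models[0]}: {signal}")
```

Signals raised by several models are the ones to trust a little more. The ones only a single model mentions are where its particular assumptions are most likely showing.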

For foresight and innovation teams, the question is not how to police the models but how to press them into service today.

Signals lets you assemble an overview of multiple AIs in a few clicks: choose a scan type, enter your organization and region, and hit Generate.

Within minutes the app collates, de-duplicates and visualizes the data, handing you a briefing of emerging signals ready for scenario work, design sprints or that afternoon’s strategy meeting.

If foresight is the art of thinking several futures at once, nothing aids the craft more than a chorus of mutually biased AIs. The trick is to keep them arguing long enough for the truth, or something close to it, to emerge.

We are rolling out access to our platform to foresight and innovation practitioners through a series of quick demos. The next one is happening next Thursday, May 29. Join us for a showcase of the platform and the reasoning behind it, and receive a custom scan for any organization or industry you want insight on.

Until next week,

MZ

Insightful interview with Altman (30 min)

I mean, in some sense, I think the Platonic ideal state is a very tiny reasoning model with a trillion tokens of context that you put your whole life into. The model never retrains; the weights never customize. But that thing can, like, reason across your whole context and do it efficiently. And every conversation you’ve ever had in your life, every book you’ve ever read, every email you’ve ever read—everything you’ve ever looked at—is in there, plus all your data from other sources. And, you know, your life just keeps appending to the context, and your company just does the same thing for all your company’s data. We can’t get there today, but I think of anything else as a compromise off that Platonic ideal, and that is how I would eventually—I hope—we do customization.

Act like a Cold War era Russian Olympic Judge

Excellent interview with Nicholas Thompson of The Atlantic. This whole conversation is gold. Don't miss it.

AGI by 2030? (60 min)

Amazing overview by Benjamin Todd. Article version.

Deep Research (9 min)

Continuing the excellent series of talks from Sequoia, Isa Fulford from OpenAI explains their Deep Research product.

Quick Links from the community

Semantic calculator: a smart way of learning about embeddings and word vectors.

This is the equivalent of doing search engine optimization in the early 2000s before everyone else caught on. Prepare your infra for agents. (WSJ)

If Artificial Insights makes sense to you, please help us out by:

📧 Subscribing to the weekly newsletter on Substack.

💬 Joining our WhatsApp group.

📥 Following the weekly newsletter on LinkedIn.

🦄 Sharing the newsletter on your socials.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.

Thank you for the fascinating newsletter article about LLMs output variant. Really appreciated learning this. Carrie