Complete Accuracy Collapse (108)

You are now closer to 2050 than the year 2000.

Welcome to your weekly download of the AI memes that matter.

Having spent all of last week in London for the inaugural European SXSW conference, I am left wondering what role, if any, events play in my own learning journey. I’ve never been a fan of large conferences – I find them overwhelming and shallow, and much prefer a continuous stream of YouTube lectures to any physical venue. Focused workshops and dedicated gatherings can be much more fruitful, but if the value proposition of an event is leaning back and listening, the whole physical aspect of standing in lines and hustling for decent seats feels incredibly cumbersome.

SXSW was no exception – the content was thin and session titles often felt like clickbait, promising deep insights while the panels I witnessed barely scratched the surface. The event attracted loads of high-profile speakers and panelists, yet the rushed format catered more to quantity than quality. Like a cheap buffet. I am certain other panels were amazing and that I probably had bad luck in choosing topics I’m already too well-informed about. Next year I might venture further outside my comfort zone and lean into subjects other than AI in the hopes of learning something new!

My highlights were undoubtedly hanging out with brilliant minds both living in London and visiting for the week. AI & AGI were on everyone’s mind, and I had the absolute privilege of hosting an innovation dinner with Henry Coutinho-Mason and HUMANS Intelligence where everyone invited explored what makes them non-obvious. Thematic work dinners are a rare pleasure and I found the participants both interesting and interested in the issues at hand. Thanks to all readers who joined – new and old – I am certain this is a format we’ll keep exploring and iterating on during this world tour.

In fact, if you are in São Paulo two weeks from now (on June 24) you should join our breakfast meeting with our partners at Cappra Institute and Echos for a similar experience with fellow innovation experts and AI-inclined futurists. Sign up on Luma quickly as our seats are limited.

Until next week,

MZ

Intelligence Collapse

A recent research paper by Apple makes some pretty bold claims: that LLMs are not actually reasoning, and that they run into some pretty steep limitations when it comes to thinking. This seems in line with the views of Gary Marcus and, to some degree, Yann LeCun.

Defining AGI & designing AI-native organization (60 min)

Wharton business school professor Ethan Mollick is the smartest person in AI today, so I highly recommend listening to his entire interview. Ethan's information density is staggering and he is full of hilarious takes.

In short: it's super difficult to hire people who are great at AI because the context varies so much. New rules apply now that we can prototype so easily, and incentives are invaluable when building something new in the organization. Clarity of vision matters more than ever.

Entrepreneurship is all about being very bad at many things but really, really good at one thing.

Here is an o3 summary of the video transcript focused on key teachings.

Strategic fork: Either cut ~25% of headcount to pocket efficiency gains, or “go Guinness” (hire aggressively and use AI to capture new markets before rivals do).

AI-native org design: Scrap legacy structures built for human-only workflows; rebuild around human-agent teams where software handles low-skill tasks and people focus on judgment, taste, and system steering.

Capability pipeline: AI boosts juniors but multiplies true experts 10-100×; if automation replaces entry-level grunt work you must create explicit training paths or the future expert pool collapses.

Leadership + Crowd + Lab model: Top execs set the AI vision, everyone experiments (“crowd”), and the best internal tinkerers form a central lab—far better than hiring an external Chief AI Officer.

Maximalist experimentation: Skip tiny PoCs; let agents attack entire end-to-end processes, benchmark results against business KPIs you care about, and iterate fast—models improve quicker than your IT roadmaps.

Aligned incentives: Pay bounties, rewrite job specs, or mandate “AI first” project drafts so staff reveal and scale their automations instead of hiding them for fear of layoffs.

Agent-native interfaces: Embed persistent agents that track context across docs, meetings, and systems; use cheap models for routine chatter and pay for heavyweight calls only when stakes demand deeper reasoning.

AI Signals The Death Of The Author

Excellent essay in Noema (via Leticia).

The meaning of a piece of writing does not depend on the identity of the author, even if the author is not human.

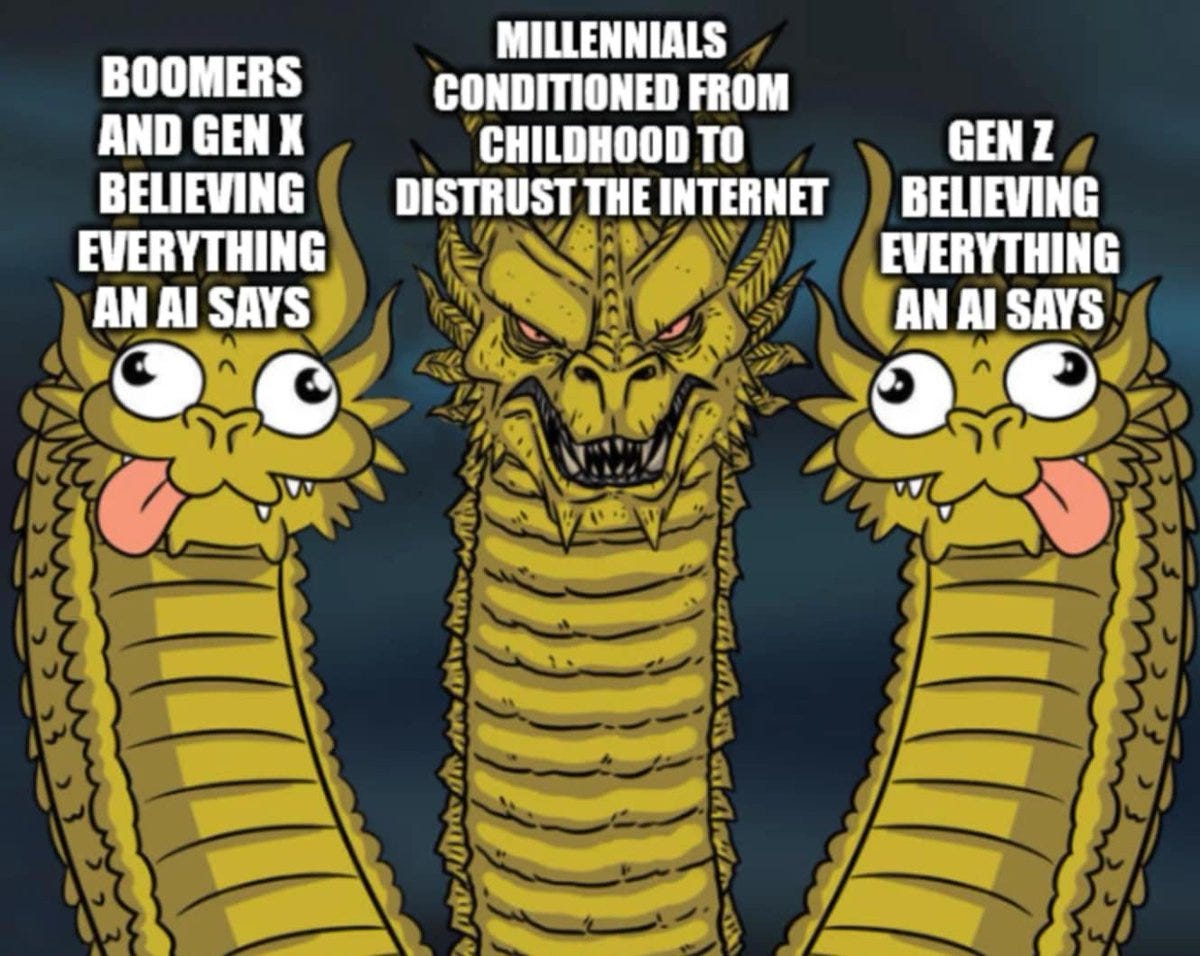

AI Helps us Sleepwalk into Social Isolation (12 min)

Disturbing findings from Big Think.

Gen Alpha Can’t Read

Deeply concerning video. The study is very U.S.-centric and hopefully doesn't apply to the rest of the world.

GenAI & Children

One in four children aged 8-12 is already using GenAI, according to a LEGO Education study, which also highlights that a majority of neurodiverse children use it for self-expression. Read more.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.