Before automating something... (004)

...ask yourself if it’s worth doing in the first place.

Happy Monday and welcome to Artificial Insights. This week’s edition was brought to you from the mountains of Hyrule between bouts of Zelda: Tears of the Kingdom, which leaves us with a short and sweet selection of Links about what to read and watch this week.

MZ

The urgent risks of runaway AI & what to do about them 🚦 (15 min)

Punchy TED talk by Gary Marcus about the urgent risks of the widespread use of AI, and how it can spread misinformation, amplify bias and reshape democracies. Gary distinguishes between symbolic and neural systems when it comes to developing truthful AI, and stresses the importance of establishing a global organization for governance and research. Follow Marcus’ Substack for more.

The nature of truth, reality & computation 🧮 (4h 15 min)

Lex Fridman interviews language and computing expert Stephen Wolfram and goes deep on the importance of symbolic representation, computational irreducibility and the limitations of science in capturing the actual complexity of the world. So many nuggets of insight, but this bit particularly resonated: “humans discovered computation but invented the computer”. Takeaway: understand the limitations of large language models and know which questions to ask.

Chatbots are not human… 🦜 (20 min)

…and you are not a parrot. Linguist Emily M. Bender is worried about what will happen when we forget this. Bender talks about the potential ramifications of blurring the lines between human and artificial intelligence, and how easily we forget that LLMs do not understand language in the same way we do.

Life After Language 💬 (10 min)

It’s obvious that the AIs can write better than 90% of humanity all the time, and the other 10% of humanity 90% of the time.

From Twitter

10 prompts to learn anything faster

Applicable approaches for going further with ChatGPT.

Emerging Vocabulary

Backpropagation

Short for "backward propagation of errors," backpropagation is a widely used algorithm for training artificial neural networks. It takes the error measured at the network's output and propagates it backward through the layers, adjusting each weight according to how much it contributed to that error. In other words, backpropagation helps the model to correct its errors.
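For the curious, here is a minimal sketch of the idea in plain Python. It assumes a toy network with one input, one hidden unit and one output; the function name `train`, the starting weights and the learning rate are all made up for illustration and do not come from any particular library.

```python
import math


def train(x=1.0, target=0.5, w1=0.5, w2=0.5, lr=0.1, steps=200):
    """Train a toy network y = w2 * tanh(w1 * x) with backpropagation.

    All default values are illustrative assumptions, not recommendations.
    """
    losses = []
    for _ in range(steps):
        # Forward pass: compute the prediction and the error.
        h = math.tanh(w1 * x)   # hidden activation
        y = w2 * h              # network output
        error = y - target
        losses.append(0.5 * error ** 2)

        # Backward pass: apply the chain rule from the output back to the input.
        grad_y = error                          # dLoss/dy
        grad_w2 = grad_y * h                    # dLoss/dw2
        grad_h = grad_y * w2                    # error passed back to the hidden unit
        grad_w1 = grad_h * (1 - h ** 2) * x     # dLoss/dw1, using tanh'(z) = 1 - tanh(z)^2

        # Nudge each weight against its gradient.
        w1 -= lr * grad_w1
        w2 -= lr * grad_w2
    return w1, w2, losses


if __name__ == "__main__":
    w1, w2, losses = train()
    print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.8f}")
```

The backward pass reuses `grad_y` to work out the gradients for both weights, which is the "propagation" part: the error at the output is handed back one layer at a time. Real networks do the same thing with matrices and many more layers.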

Project Showcase

Catbird.ai

Generate images from dozens of models at once: fifteen concurrent models are better than one.

SponCon

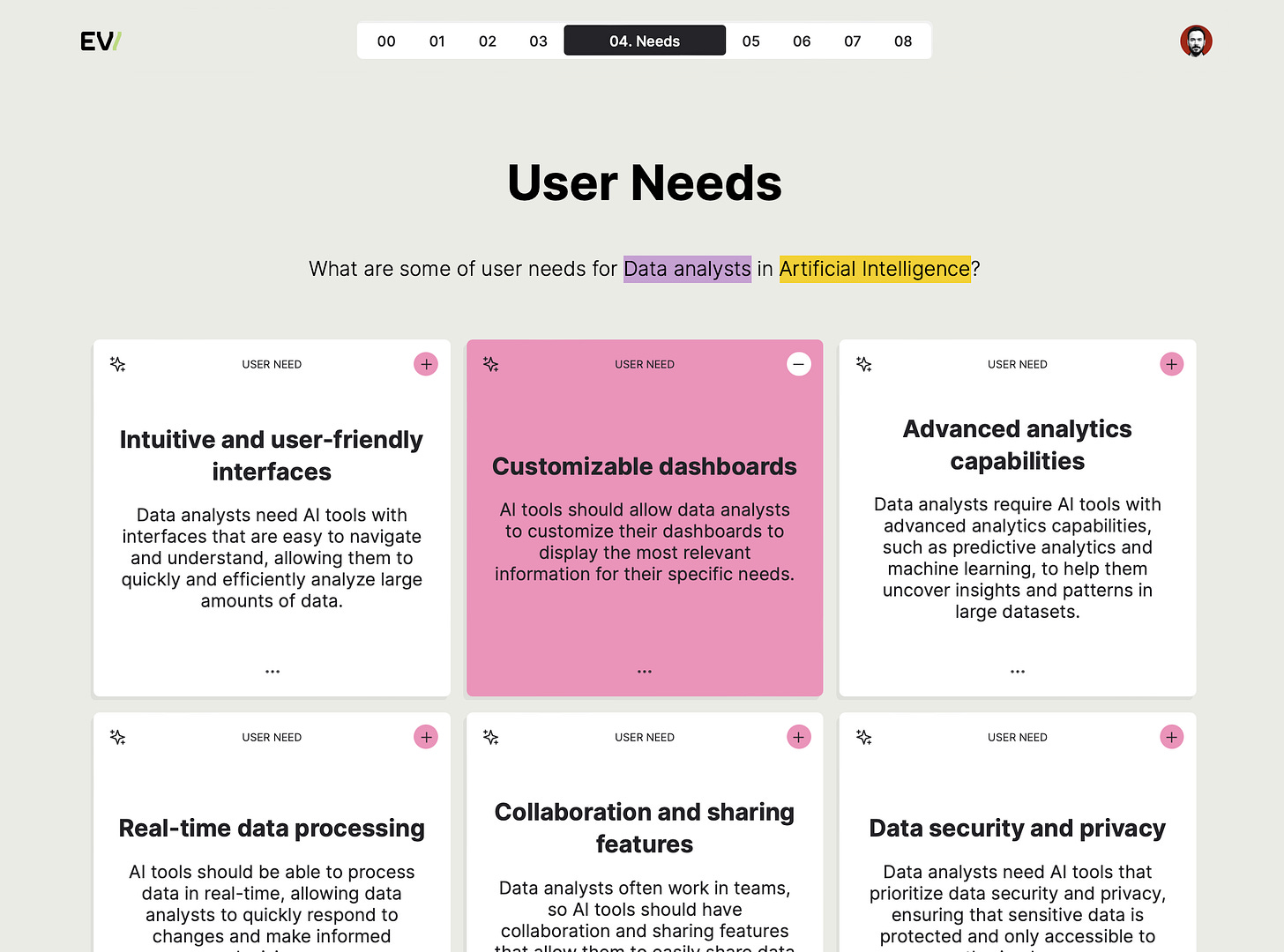

Envisioning Sandbox

Envisioning is developing a platform for facilitating workshops which uses artificial intelligence to augment the creative flow. We do this by combining a structured prompting language with an intuitive user experience. We’re hosting a public Sandbox demo later this month to present the platform and share early access links. More info to follow in coming weeks, but register today to learn more.

Technology & Culture

The cultural quandary of responsible AI

By Luma Eldin: AI debates often revolve around regulating AI and how to do so. It is hard to contest that AI should be designed and used in ways that empower individuals and communities, strengthen the human connection, and promote social and economic equity. Moreover, AI promises to foster cultural understanding, diversity, and creativity in a world that has lost these precious human potentials. While there is consensus that some form of regulation is necessary to ensure the responsible use of AI, opinions on what this should look like vary. Additionally, different cultural backgrounds and practices further complicate a global alignment on a unified regulatory approach.

If Artificial Insights makes sense to you, please help me out:

Subscribe to the weekly newsletter on Substack.

Forward this issue to colleagues and friends wanting to learn about AGI.

Share the newsletter on your socials.

Comment with your favorite talks and thinkers.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.

You are receiving this newsletter because you signed up on envisioning.io.