As an AI language model... (002)

Welcome to Artificial Insights, a weekly newsletter about our transition towards AGI.

Many of you asked me whether this newsletter is – you guessed it – written by AI.

The boring answer is I’m still pecking out every letter on a computer keyboard, and very much finding the voice and intent of this newsletter by (irreducibly) writing it. I am obviously exploring approaches for creating an AI research copilot, and am using the newsletter as an opportunity to reflect on what automation does best, and where I should stay in the picture.

This week’s edition contains video clips and interviews from what I hope is a representative selection of voices about the transition toward AGI. The amount of content being generated in this space is staggering, so keeping the issues short and insightful feels like a top priority.

Thanks to all new subscribers – and apologies for the formatting errors last week – Substack noob here. That being said, I’m thrilled with how many people are actually interested in AGI! There are so many good daily AI newsletters to follow, so I hope Artificial Insights will help more people develop a bigger-picture understanding of the field. Institutions like Oxford’s Future of Humanity Institute and forums like LessWrong are great places to go deeper into the implications of and pathways to AGI. This newsletter is about bridging the knowledge gap and helping preserve a human perspective on our inevitable technological future. Thanks for reading! 🖖

MZ

AI’s Human Factor 🧬 (30 min)

Stanford researcher Fei-Fei Li and OpenAI CTO Mira Murati discuss the importance of taking a human-centered approach to artificial intelligence in order to ensure safety. They also address the tension between innovation and regulation, noting that the right balance must be struck in order to allow for both. Great insight into the different actors involved in deciding how AGI unfolds as well as how different priorities need to align to avoid Moloch.

Hello mortals! 🐛 (10 min)

Weird but accurate video clip explaining current and future possibilities for AGI. Invites us to ask what the objective of all this development is and should be. Leans a little into EA territory but makes you think about the risks of projecting human flaws onto AI agents and what the results of neural networks imitating human brains could look like.

Defining AGI as the future of programming 👀 (17 min)

MIT researcher Max Tegmark chats with Lex Fridman about what is missing from our conversations about AGI and what our concerns should be. This clip captures Tegmark’s perspective on how the increased speed of programming due to AI could lead to recursive self-improvement, which might spark an intelligence revolution, unless contained by the laws of physics. They also discuss incentive alignment for the greater good and how to educate policymakers about safety requirements.

The simulation was not created for us 🐞 (7 min)

If we are living in a simulation, then surely there must be bugs in the “software”. On Lex Fridman’s podcast, computer scientists Michael Littman and Charles Isbell dive into the thought experiment of whether we are living in a simulation and how increasingly realistic virtual reality could lead us to spend our time in artificial worlds. Isbell briefly mentions the sci-fi story Calculating God for those wanting to dive deeper into what such a universe could look like.

Exploring the potential negative effects of AI on society 🎭 (60 min)

Tristan Harris and Aza Raskin from the Center for Humane Technology present The AI Dilemma, a compelling lecture about our potential AGI apocalypse. They offer a humanistic perspective on where we are heading because of the exponential curve of transformer models and how treating everything as text or language is leading to a changing perception of reality. The growing capacity of these tools has accelerated progress and made it impossible for any one individual to actually keep up with the field. Our responsibility is significant, as is having democratic debate about the implications of AI. Join their ongoing meetings and foundations for humane technology for more interesting takes on AGI.

Emerging Vocabulary

Steerability

The ability to guide or control the behavior of an AI system, particularly in its decision-making and output generation processes. Steerability is an important concept because it allows human operators to influence AI systems' actions, ensuring that they align with human values, ethical principles, and desired goals.

Project Showcase

Godmode.space

AutoGPT in action is wild.

Via Emil Ahlbäck on Twitter.

Unsupervised Work

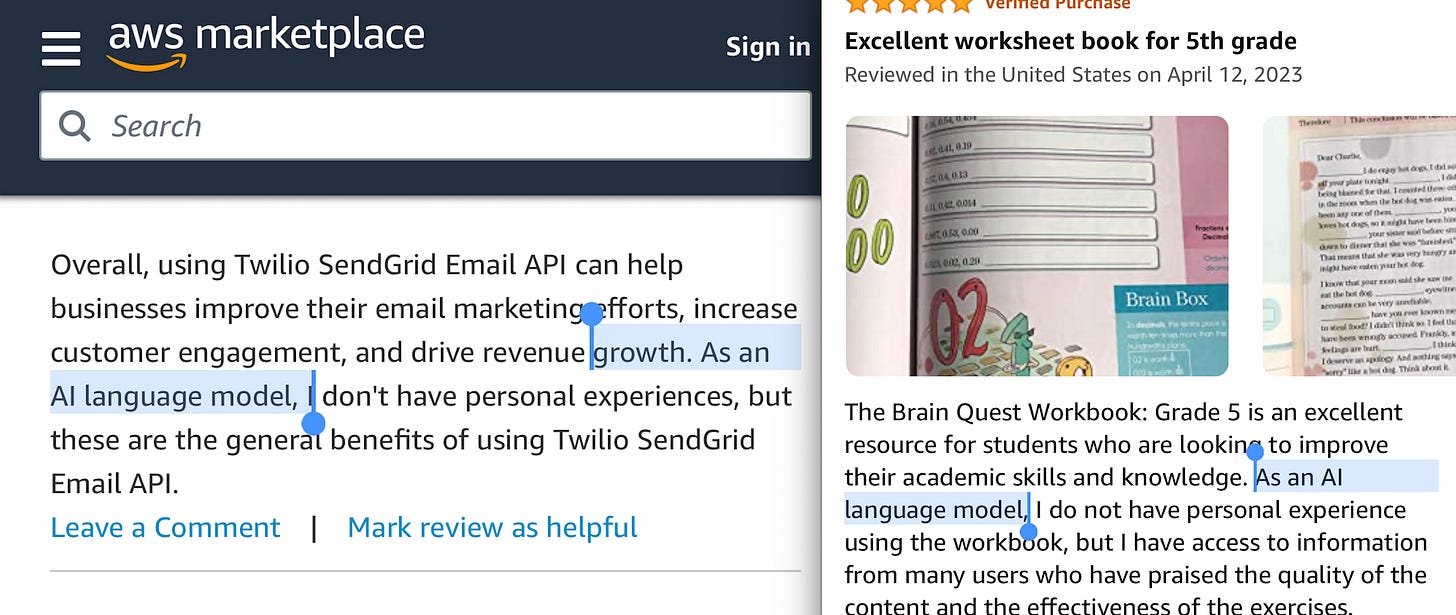

This caught my eye: the proliferation of AI-generated content isn’t tapering off anytime soon – and will probably become a nuisance before long!

Technology & Culture

Last week we hosted a conversation about the impact of AI on culture and being human via the Envisioning community. We explored the effects of generative AI on employment, work and creativity. Thanks to those who joined us, and expect new sessions announced through this newsletter.

If Artificial Insights makes sense to you, please help me out:

Subscribe to the weekly newsletter on Substack.

Forward this issue to colleagues and friends wanting to learn about AGI.

Share the newsletter on your socials.

Comment with your favorite talks and thinkers.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.

You are receiving this newsletter because you signed up on envisioning.io.

Artificial Insights is a weekly newsletter about what's happening in AI and what you should understand about our transition toward AGI. Each issue features interviews, articles and papers selected for an audience of leaders and enthusiasts.