Are we in a bubble?

Will the levels of AI capability plateau around the skillset of average experts, and never go toward general or super intelligence?

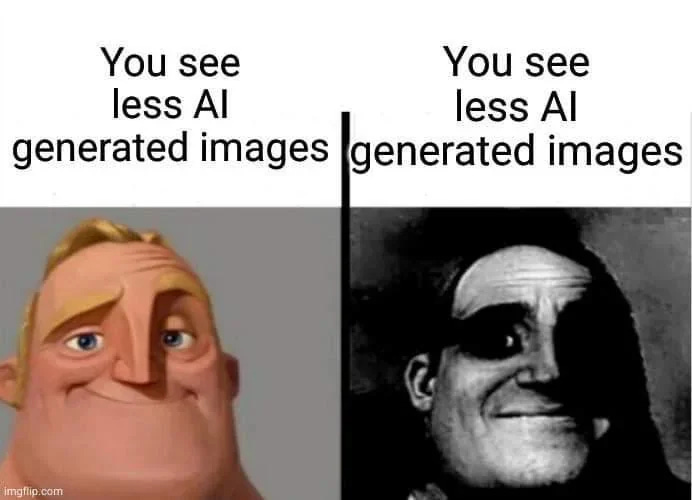

Those with insight into unreleased models are convinced we are only scratching the surface of possibility. You would expect this position regardless of capabilities, as much of the industry revolves around hype and future promises.

The gap between internal and public models is somewhere between 18 and 24 months, meaning that most AI you have access to today was tested internally around two years ago. Ask anyone with access to these models what they think the future holds, and they all circle back to the same ideas: AI risk is present, and the potential benefits from developing it far outweigh any potential negative consequences. Sure. History tells us this is implausible. I for one hope we don’t end up with a handful of individuals owning the majority of our AIs, as is our experience with social media today.

Instead, the best we can optimize for is making sure AI is distributed and accessible to all. Many of us are learning quickly from what is developing and establishing best practices and guidelines for others to learn from. We should not expect everyone to become technically literate in ML, but those who are have a responsibility to share what they know and prepare those who are not. I am seeing more companies and public institutions working toward policies around responsible AI, which is reassuring. I will share some of these initiatives here as they go public.

On a personal level, that means investing more effort into publishing the framework I’ve been tinkering with for the past year. I have a hypothesis about how we will reach AGI and have designed a ten-part framework to help those responsible for making strategic decisions around AI increase their technical understanding. You can find a recent 50-min recording of this presentation on YouTube. Would love feedback about this.

I’ll have a lot more to share around this framework in the coming months. Thanks for being here for it.

I’ll be in Vienna this week for TEDAI. Let me know if you’re around.

We are hosting an online community meetup next week. Mostly informal and meant as an opportunity to introduce ourselves and learn about who is in the group.

Last week’s in-person meetup in Amsterdam was a blast! I’ll be organizing monthly AI salons in a similar format on the evening of the first Thursday of every month, starting Nov 7. Links soon.

Until next week,

MZ

Nobel Prize in Chemistry winner Demis Hassabis (2h)

Today is a great day to listen to Demis on Lex from a couple of years ago. He talks quite a bit about protein structure prediction and Lex straight out says Demis will some day win the Nobel Prize.

Nobel Prize in Physics winner Geoffrey Hinton (45 min)

From the University of Toronto.

Inside NotebookLM (50 min)

Surely by now you have listened to a couple of Deep Dive podcasts from Google’s new NotebookLM. Here is a deep dive on its development with Raiza Martin, senior PM @ Google Labs, on Lenny's Podcast. Key to understanding the magic of NotebookLM are the skills it gets from Gemini (long context window, multimodal input, steerability, etc.).

AGI is not about scale, it’s about abstraction (45 min)

François Chollet, in his AGI-24 keynote, critiques the limitations of LLMs, contrasting their strengths in pattern recognition with their weaknesses in logical reasoning and generalization. He introduces the Abstraction and Reasoning Corpus (ARC) as a way to measure AGI progress and suggests that combining deep learning with program synthesis may lead to significant advancements. Via Guilherme.

Non-chatbot AI interfaces (4 min)

Cove looks like a smart approach for co-creating infinite canvases with AI. More on X.

Current LLMs are not capable of genuine reasoning.

Unexpected paper by Apple.

So You Want to Be a Sorcerer in the Age of Mythic Powers (2h)

Spectacular podcast episode about some of the possible spiritual consequences of creating and using AI. Fascinating.

Machines of Loving Grace (1h)

Long essay by Anthropic CEO Dario Amodei about the path from here to AGI. Started reading it last night and especially recommend looking at the implications he predicts.

I believe that in the AI age, we should be talking about the marginal returns to intelligence, and trying to figure out what the other factors are that are complementary to intelligence and that become limiting factors when intelligence is very high.

The essay title refers to a haunting poem by Richard Brautigan. Thanks Iolanda!

My First AI

This is so cool. How will kids first experience AI, today and in the future? Learned about it through the City of Amsterdam's Design team as part of their research on civic AI. Haven't seen anything like it before. Thanks Leonore Snoek!

Exploring latent spaces

Short clip via poetengineer__ on X.

If Artificial Insights makes sense to you, please help us out by:

📧 Subscribing to the weekly newsletter on Substack.

💬 Joining our WhatsApp group.

📥 Following the weekly newsletter on LinkedIn.

🦄 Sharing the newsletter on your socials.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.
