A copy of a copy of a copy (053)

Your weekly guidebook to augmented incoherence.

Happy Monday and welcome to your weekly guidebook to the journey of personal transformation in an age of augmented incoherence.

Machine learning is not a new endeavor, and the dream of autonomous cognition has taken many forms over the last decades and centuries, but there is something to be said about the moment we are sharing right now. Sci-fi geeks and technology enthusiasts spent decades wondering when machines would eventually pass the Turing Test, and it turns out they did sometime between GPT-3.5 and GPT-4, and nobody really noticed. We used to think that having an AI masquerade as human would be a monumental transition, while in reality it had virtually no impact on our perception of AI capabilities, and we quickly moved on to other more interesting (and important) problems.

This mismatch between perception and reality is what I find fascinating about emerging technology: it quickly rewires our expectations of the world, while leaving almost no trace of how we used to think before a certain technology came into our lives.

While I try to avoid senseless hype, I am also entirely convinced that the current wave of AI techniques and applications, coupled with an unimaginable amount of investment and academic research, means that we are barely scratching the surface of what this technology will be capable of. Yes, it will change our organizations, institutions and maybe even personal lives. How exactly this will take place is a matter of careful observation and quick response, but we are barely beginning to comprehend what lies behind the phase transition we’re all experiencing.

Maybe that is why the drama-of-the-week over at OpenAI doesn’t really bother me. Sam Altman became an internet darling thanks to a rapid ascent and lowercase posts, and it’s no secret that OpenAI is beholden only to shareholder value, not humanitarian values. A rapid rise in valuation in an indeterminate industry is virtually a guarantee of chaos and misaligned expectations between stakeholders. Let’s just hope they don’t ruin the horizon for the rest of us.

Until next week,

MZ

P.S. if you made it this far you should join our WhatsApp Group.

Thanks, Greg!

Combinatorial Innovation at Scale

Although this presentation isn’t strictly about AI, the technology transformation India is going through cannot be ignored. The country recently leapfrogged the rest of the world in transaction and identity tech, which puts it in a unique position to implement nationwide AI solutions at scale.

In May of 2016, we launched UPI... by October 2016, we had a hundred thousand UPI transactions a month, and today we have 9 billion.

From Pre-training to Post-training and Beyond

Insightful in-depth interview with OpenAI co-founder John Schulman about the evolving edge of ML capabilities. Technical but super interesting.

I expect that models will be able to use websites that are designed for humans just by using vision, after the vision capabilities get a bit better... But there will be some websites that are going to benefit a lot from AIs being able to use them.

From Darkness to Sight: The Future of AI with Spatial Intelligence

In this presentation, Dr. Fei-Fei Li explores the evolution of vision and its impact on intelligence, paralleling it with advancements in AI.

Spatial intelligence links perception with action. If we want to advance AI beyond its current capabilities, we want more than AI that can see and talk. We want AI that can do.

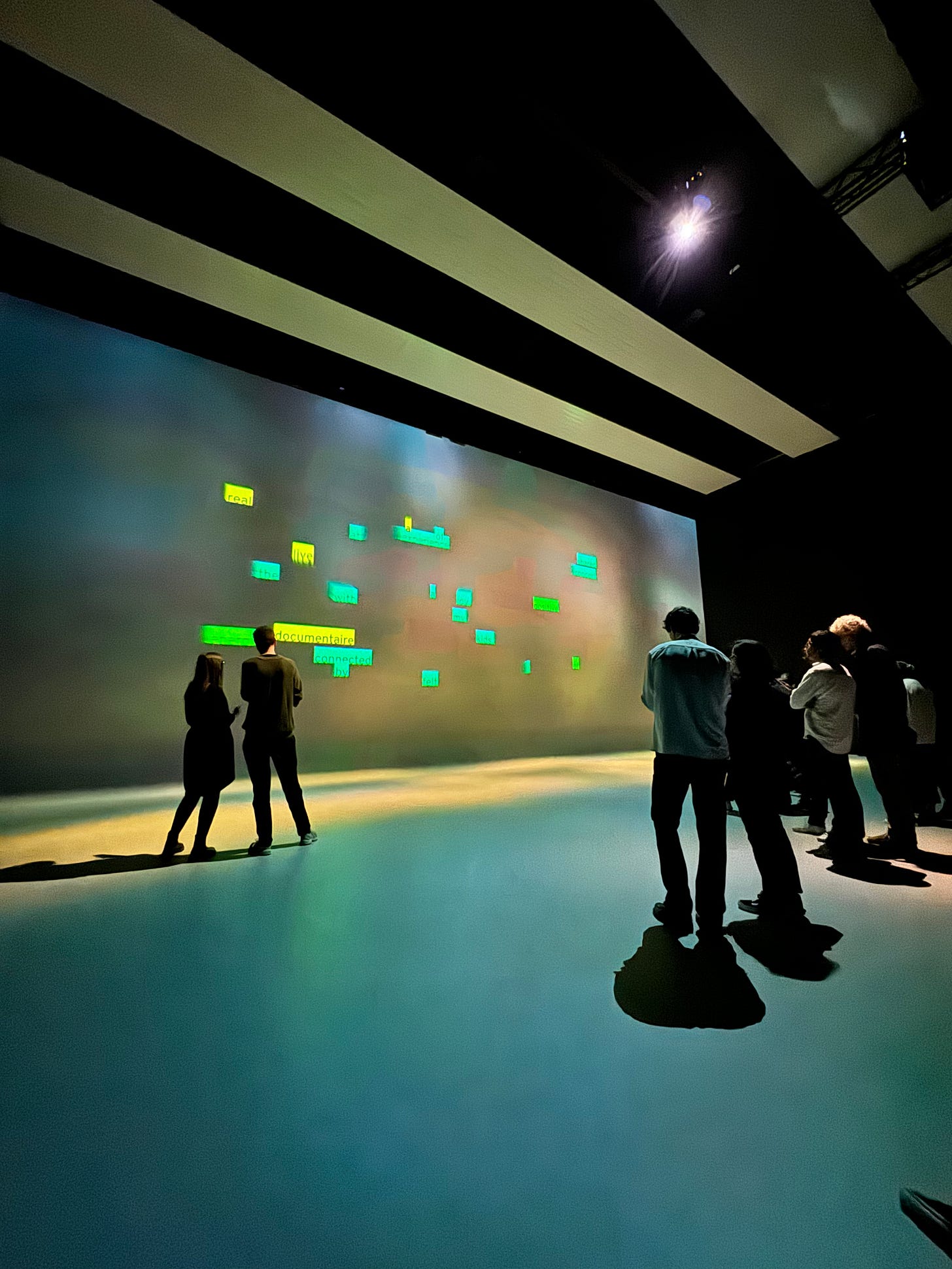

Interactive generative experience

Last week, design studio Tellart extended an invitation to a private interactive AI experience at the NXT Museum in Amsterdam, which I happily accepted.

The premise of the experience was to generate an immersive story, with imagery, narrative and ambient music, based on the inputs of the participants in the room. While the experience was framed as a quick pilot from the studio, the technical prowess on display was impressive, and the audience gasped in awe at what was created for us.

We were asked to log in to the museum’s Wi-Fi and access a web app via QR code, and we each used our phones throughout the process. The bulk of the experience revolved around interacting with “SAM”, a generative intelligence which chatted with each participant individually and collated their inputs into a collective output throughout the hour we spent together. It did so first by representing our inputs as a floating tag cloud, and then with a generative video clip and story narrated by SAM, which incorporated snippets and aspects of our different stories into its grand narrative.

What stood out to me, other than the competent technical implementation, was how positively the audience reacted. Using personal data is obviously a contentious issue. Tellart explored it joyfully by framing the dialogue around an AI wanting to learn about its audience, and that framing landed surprisingly well.

I hope they offer similar installations in the future, and will let you all know if they do!

The interactive storytelling installation invites the audience to reflect on the intersection between AI and humanity, creativity and nature. In the experience, guests will answer some questions on their phone and the generative AI will create a cinematic world of the future on the big screen, bringing personal reflections to life in a unique video narrative, one time only. The experience will encourage us to consider the impact of our daily choices related to themes like food, self and the environment. And more deeply, it will make us question the value and ethics of AI for the future.

Thanks, FR!

If Artificial Insights makes sense to you, please help us out by:

📧 Subscribing to the weekly newsletter on Substack.

💬 Joining our WhatsApp group.

📥 Following the weekly newsletter on LinkedIn.

🏅 Forwarding this issue to colleagues and friends.

🦄 Sharing the newsletter on your socials.

🎯 Commenting with your favorite talks and thinkers.

Artificial Insights is written by Michell Zappa, CEO and founder of Envisioning, a technology research institute.
